172-Class Food Image Classification with ResNet-152

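An image classification project built on the ResNet architecture and the VireoFood-172 dataset. The project focuses on customizing the dataset and the data generator, which makes the model more flexible and helps it converge quickly.

Reposted from AI Studio. Project link:

https://aistudio.baidu.com/aistudio/projectdetail/3395323?contributionType=1&shared=1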

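I. Project Background and Idea

- Computer vision is used more and more widely, for example in surveillance cameras and healthcare. Food recognition is one important application. Its practical value and its scientific challenges both make it worth further study.

- Convolutional neural networks (CNNs) have recently been applied to food recognition. These methods use a CNN to extract features from food images and compute the similarity between those features. A classifier trained on the features then recognizes the food.

- This project uses the VireoFood-172 dataset for training and testing. It contains 110,241 food images from 172 categories, manually annotated with 353 ingredients.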

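II. Project Implementation

Step 1: Prepare the data

(1) Dataset overview

- This project is built on PaddlePaddle and uses the VireoFood-172 dataset. The dataset divides food into 172 categories. Each category holds 200 to 1,000 images crawled from Baidu and Google image search, which together cover most foods people eat in daily life. The dataset is large and of good quality, so it meets the needs of deep learning training.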

(2) Unzip the dataset

```
!unzip -q -o data/data124758/splitedDataset.zip
```

(3) Process the dataset

- After obtaining the dataset, we first split it into training and evaluation parts at an 8:2 ratio and shuffle it randomly.
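The article does not show its splitting script. The sketch below is a minimal version under two assumptions: the full image list is a text file with one `<image path> <label>` line per image, and the helper names `split_list` and `write_list` are hypothetical. It shuffles the lines with a fixed seed and then cuts them at 80%:

```python
import random


def split_list(lines, train_ratio=0.8, seed=0):
    """Shuffle the sample lines reproducibly, then cut them into train/evaluation parts."""
    lines = list(lines)
    random.Random(seed).shuffle(lines)  # local RNG, so the global random state is untouched
    cut = int(len(lines) * train_ratio)
    return lines[:cut], lines[cut:]


def write_list(path, lines):
    """Write one sample per line, adding the newline where it is missing."""
    with open(path, 'w') as f:
        f.writelines(line if line.endswith('\n') else line + '\n' for line in lines)
```

Typical use would be `train, val = split_list(open('all_list.txt'))` followed by `write_list('./train_data1.txt', train)`, where `all_list.txt` is a hypothetical file name.

For the 110,241 VireoFood-172 images, `int(110241 * 0.8)` gives 88,192 training and 22,049 evaluation samples. With `batch_size=64`, that is 1,378 training steps and 345 evaluation steps per epoch, which matches the training log.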

(4) Import the required modules

```python
import paddle
import numpy as np
import os
```

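(5) Build the training and evaluation sets with the data generator

- Import our own data generator class `FoodDataset`

- Training set: `train_dataset = FoodDataset(train_data)`

- Evaluation set: `eval_dataset = FoodDataset(validation_data)`

- train size: 88192

- eval size: 22049

- The sizes match the 8:2 split (88,192 + 22,049 = 110,241 images)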

```python
from dataset import FoodDataset  # the project's own dataset module

train_data = './train_data1.txt'
validation_data = './valida_data1.txt'

train_dataset = FoodDataset(train_data)
eval_dataset = FoodDataset(validation_data)

print('train size:', len(train_dataset))
print('eval size:', len(eval_dataset))

# Optional check of a single sample:
# for data, label in train_dataset:
#     print(np.array(data).shape)
#     print(label)
#     break
```

```
train size: 88192
eval size: 22049
```
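The article does not include `dataset.py` itself. To work with `paddle.Model.fit`, a `FoodDataset` could subclass `paddle.io.Dataset` and implement `__getitem__` and `__len__`. The version below is hypothetical, not the author's implementation. It assumes the list-file format `<image path> <integer label>`, 224×224 RGB input scaled to [0, 1], and Pillow for decoding. It leaves out augmentation and mean/std normalization.

```python
import numpy as np

try:
    from paddle.io import Dataset  # base class expected by paddle.Model.fit / paddle.io.DataLoader
except ImportError:
    Dataset = object  # fallback so the sketch can be exercised without PaddlePaddle


def load_rgb(path, size=224):
    """Decode an image as RGB, resize it to size x size, return float32 CHW scaled to [0, 1]."""
    from PIL import Image  # Pillow is an assumption of this sketch
    img = Image.open(path).convert('RGB').resize((size, size))
    return np.asarray(img, dtype='float32').transpose(2, 0, 1) / 255.0


class FoodDataset(Dataset):
    """Hypothetical stand-in for dataset.FoodDataset; reads '<image path> <label>' lines."""

    def __init__(self, list_file, loader=load_rgb):
        super().__init__()
        self.loader = loader
        self.samples = []
        with open(list_file) as f:
            for line in f:
                parts = line.split()
                if len(parts) == 2:  # skip blank or malformed lines
                    self.samples.append((parts[0], int(parts[1])))

    def __getitem__(self, idx):
        path, label = self.samples[idx]
        # label as an int64 array of shape [1], a common layout for Paddle classification labels
        return self.loader(path), np.array([label], dtype='int64')

    def __len__(self):
        return len(self.samples)
```

The `loader` argument keeps image decoding swappable. You could plug in OpenCV or an augmentation pipeline without touching the list-file parsing.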

```python
import paddle


class DenseFoodModel(paddle.nn.Layer):
    """DenseNet-121-style network (dense blocks of 6, 12, 24, 16 layers, growth rate 32) with ELU."""

    def __init__(self):
        super(DenseFoodModel, self).__init__()
        self.num_labels = 172

    def dense_block(self, x, blocks, name):
        # stack `blocks` conv_blocks; each one concatenates 32 (growth rate) new channels onto x
        for i in range(blocks):
            x = self.conv_block(x, 32, name=name + '_block' + str(i + 1))
        return x

    def transition_block(self, x, reduction, name):
        # BN -> ELU -> 1x1 conv that scales the channel count by `reduction` -> 2x2 max pooling
        x = paddle.nn.BatchNorm2D(num_features=x.shape[1], epsilon=1.001e-5)(x)
        x = paddle.nn.ELU(name=name + '_elu')(x)
        x = paddle.nn.Conv2D(in_channels=x.shape[1], out_channels=int(x.shape[1] * reduction),
                             kernel_size=1, bias_attr=False)(x)
        x = paddle.nn.MaxPool2D(kernel_size=2, stride=2, name=name + '_pool')(x)
        return x

    def conv_block(self, x, growth_rate, name):
        # bottleneck: BN -> ELU -> 1x1 conv (4 * growth_rate) -> BN -> ELU -> 3x3 conv (growth_rate)
        x1 = paddle.nn.BatchNorm2D(num_features=x.shape[1], epsilon=1.001e-5)(x)
        x1 = paddle.nn.ELU(name=name + '_0_elu')(x1)
        x1 = paddle.nn.Conv2D(in_channels=x1.shape[1], out_channels=4 * growth_rate, kernel_size=1,
                              bias_attr=False)(x1)
        x1 = paddle.nn.BatchNorm2D(num_features=x1.shape[1], epsilon=1.001e-5)(x1)
        x1 = paddle.nn.ELU(name=name + '_1_elu')(x1)
        x1 = paddle.nn.Conv2D(in_channels=x1.shape[1], out_channels=growth_rate, kernel_size=3,
                              padding='SAME', bias_attr=False)(x1)
        # dense connection: concatenate the new feature maps with the input along the channel axis
        return paddle.concat(x=[x, x1], axis=1)

    def forward(self, input):
        # NOTE: every layer here (and in the helper methods) is created during the call, so it is
        # not registered as a sublayer and gets freshly initialized weights on each forward pass
        x = paddle.nn.Conv2D(in_channels=3, out_channels=64, kernel_size=7, stride=2, padding=3,
                             bias_attr=False)(input)
        x = paddle.nn.BatchNorm2D(num_features=64, epsilon=1.001e-5)(x)
        x = paddle.nn.ELU(name='conv1/elu')(x)
        x = paddle.nn.MaxPool2D(kernel_size=3, stride=2, padding=1, name='pool1')(x)
        x = self.dense_block(x, 6, name='conv2')
        x = self.transition_block(x, 0.5, name='pool2')
        x = self.dense_block(x, 12, name='conv3')
        x = self.transition_block(x, 0.5, name='pool3')
        x = self.dense_block(x, 24, name='conv4')
        x = self.transition_block(x, 0.5, name='pool4')
        x = self.dense_block(x, 16, name='conv5')
        x = paddle.nn.BatchNorm2D(num_features=x.shape[1], epsilon=1.001e-5)(x)
        x = paddle.nn.ELU(name='elu')(x)
        x = paddle.nn.AdaptiveAvgPool2D(output_size=1)(x)
        x = paddle.squeeze(x, axis=[2, 3])
        # return raw logits: paddle.nn.CrossEntropyLoss applies softmax itself,
        # so an extra softmax here would apply it twice
        return paddle.nn.Linear(in_features=1024, out_features=173)(x)


model = paddle.Model(DenseFoodModel())
model.summary((-1, 3, 224, 224))
```

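```
/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/nn/layer/norm.py:653: UserWarning: When training, we now always track global mean and variance.
  "When training, we now always track global mean and variance.")
----------------------------------------------------------------------------
 Layer (type)        Input Shape          Output Shape         Param #
============================================================================
DenseFoodModel-1  [[1, 3, 224, 224]]        [1, 173]                0
============================================================================
Total params: 0
Trainable params: 0
Non-trainable params: 0
----------------------------------------------------------------------------
Input size (MB): 0.57
Forward/backward pass size (MB): 0.00
Params size (MB): 0.00
Estimated Total Size (MB): 0.58
----------------------------------------------------------------------------

{'total_params': 0, 'trainable_params': 0}
```

This summary reports 0 parameters. The reason is that every layer of `DenseFoodModel` is instantiated inside `forward` and its helper methods, not assigned as an attribute in `__init__`. Paddle therefore never registers these layers as sublayers. As a result, `model.parameters()` is empty and each forward pass runs with freshly initialized weights, so the network cannot be trained as written. The fix is to build the layers once in `__init__` (for example, collected in a `paddle.nn.Sequential`) and only call them in `forward`.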
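The hard-coded `in_features=1024` of the final `Linear` layer comes from the channel bookkeeping of `dense_block` and `transition_block`. The pure-Python sketch below traces the channel count through the network with the same settings: 64 stem channels, growth rate 32, blocks of 6, 12, 24 and 16 layers, and halving in each transition. The function name `densenet_channels` is illustrative and not part of the project.

```python
def densenet_channels(init_channels=64, growth_rate=32, blocks=(6, 12, 24, 16), reduction=0.5):
    """Return (final channel count, channel count after every dense/transition block)."""
    channels = init_channels
    trace = []
    for i, n_layers in enumerate(blocks):
        channels += n_layers * growth_rate  # each conv_block concatenates growth_rate new maps
        trace.append(channels)
        if i < len(blocks) - 1:  # a transition_block follows every dense block except the last
            channels = int(channels * reduction)
            trace.append(channels)
    return channels, trace


final_channels, trace = densenet_channels()
print(final_channels, trace)
```

With these defaults the trace is 256, 128, 512, 256, 1024, 512, 1024. It ends at the 1024 features the classifier expects. If you change `blocks` or `growth_rate` without updating `in_features`, the model breaks, so computing the value is safer than hard-coding it.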

Step 2: Network configuration

(1) Building the network

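This project uses the ResNet residual network built into PaddlePaddle. The ResNet residual block is shown in the figure below:

Here x is the input of the residual block, and it is fed along two paths. The first path passes through the weight layers, which compute a mapping of x (as if x were fed into a function); call the result f(x). The second path is the shortcut branch, which outputs x unchanged. The two outputs are added, f(x) + x, and the sum is passed through the activation function. This is the basic structure of a residual block.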
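The block just described can be sketched in NumPy. To keep the sketch short, two plain matrix multiplications stand in for the convolutional weight layers, and batch normalization is omitted. `residual_block` is an illustrative name, not a Paddle API.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def residual_block(x, w1, w2):
    """Basic residual block: y = relu(f(x) + x), with f(x) = w2 @ relu(w1 @ x).

    x: (d,) input; w1: (h, d) and w2: (d, h) stand in for the two weight layers.
    """
    fx = w2 @ relu(w1 @ x)  # residual branch: weight layer -> ReLU -> weight layer
    return relu(fx + x)     # shortcut branch adds x unchanged, then the activation is applied
```

If both weight matrices are zero, f(x) = 0 and the block reduces to relu(x). A residual block can therefore easily represent an identity-like mapping, which is the intuition behind stacking very many of them.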

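The figure below shows the detailed structure of each ResNet variant:

Here we look at ResNet152. The 152 counts the layers that have learnable weights (convolutional and fully connected), not convolutions alone.

ResNet152 has 3 + 8 + 36 + 3 = 50 bottleneck blocks with 3 convolutions each, which gives 150 convolutions. Two more layers bring the total to 1 + 150 + 1 = 152. The first is the initial convolution, which extracts features from the 3-channel image. The second is the final fully connected classifier, which follows an adaptive average pooling that reduces each feature map to 1 × 1. The four 1 × 1 projection convolutions on the shortcuts (one at the start of each stage) are conventionally not counted. That is why the model summary lists 155 `Conv2D` layers.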
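The layer arithmetic above can be checked for the three bottleneck ResNets. The per-stage block counts are the standard ones for ResNet50/101/152. The helper names are illustrative.

```python
# Bottleneck blocks per stage (conv2_x .. conv5_x) for the bottleneck ResNets
RESNET_STAGES = {
    50: (3, 4, 6, 3),
    101: (3, 4, 23, 3),
    152: (3, 8, 36, 3),
}


def weighted_depth(stages, convs_per_block=3):
    """Layers counted in the model name: stem conv + block convs + final fully connected layer."""
    return 1 + convs_per_block * sum(stages) + 1


def conv2d_count(stages, convs_per_block=3):
    """All Conv2D layers, including one 1x1 projection shortcut at the start of each stage."""
    return 1 + convs_per_block * sum(stages) + len(stages)


for depth, stages in RESNET_STAGES.items():
    print(depth, sum(stages), weighted_depth(stages), conv2d_count(stages))
```

For ResNet152 the loop prints `152 50 152 155`. That is 50 blocks, a nominal depth of 152, and the 155 `Conv2D` layers that appear in the model summary.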

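We started with the ResNet50 image classification model, but after more than 100 training epochs its accuracy stayed at only about 88%. We therefore switched models and trained ResNet101 and ResNet152 for 100 epochs each. ResNet101 reached 88% accuracy on the training set and 80% on the test set. ResNet152 reached 92% on the training set and 82% on the test set.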

```python
# ImageNet-pretrained ResNet152 with a new 173-way classification head
network = paddle.vision.models.resnet152(num_classes=173, pretrained=True)
model = paddle.Model(network)
model.summary((-1, 3, 224, 224))
```

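Loading the pretrained weights prints a progress bar followed by two warnings:

```
100%|██████████| 355826/355826 [00:09<00:00, 38920.04it/s]
/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1441: UserWarning: Skip loading for fc.weight. fc.weight receives a shape [2048, 1000], but the expected shape is [2048, 173].
  warnings.warn(("Skip loading for {}. ".format(key) + str(err)))
/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1441: UserWarning: Skip loading for fc.bias. fc.bias receives a shape [1000], but the expected shape is [173].
  warnings.warn(("Skip loading for {}. ".format(key) + str(err)))
```

These warnings are expected. The pretrained classification head was trained for the 1000 ImageNet classes, so `fc.weight` and `fc.bias` are not loaded. The new 173-way layer starts from random initialization, while the rest of the network keeps its pretrained weights.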

-------------------------------------------------------------------------------

Layer (type) Input Shape Output Shape Param #

===============================================================================

Conv2D-1 [[1, 3, 224, 224]] [1, 64, 112, 112] 9,408

BatchNorm2D-1 [[1, 64, 112, 112]] [1, 64, 112, 112] 256

ReLU-1 [[1, 64, 112, 112]] [1, 64, 112, 112] 0

MaxPool2D-1 [[1, 64, 112, 112]] [1, 64, 56, 56] 0

Conv2D-3 [[1, 64, 56, 56]] [1, 64, 56, 56] 4,096

BatchNorm2D-3 [[1, 64, 56, 56]] [1, 64, 56, 56] 256

ReLU-2 [[1, 256, 56, 56]] [1, 256, 56, 56] 0

Conv2D-4 [[1, 64, 56, 56]] [1, 64, 56, 56] 36,864

BatchNorm2D-4 [[1, 64, 56, 56]] [1, 64, 56, 56] 256

Conv2D-5 [[1, 64, 56, 56]] [1, 256, 56, 56] 16,384

BatchNorm2D-5 [[1, 256, 56, 56]] [1, 256, 56, 56] 1,024

Conv2D-2 [[1, 64, 56, 56]] [1, 256, 56, 56] 16,384

BatchNorm2D-2 [[1, 256, 56, 56]] [1, 256, 56, 56] 1,024

BottleneckBlock-1 [[1, 64, 56, 56]] [1, 256, 56, 56] 0

Conv2D-6 [[1, 256, 56, 56]] [1, 64, 56, 56] 16,384

BatchNorm2D-6 [[1, 64, 56, 56]] [1, 64, 56, 56] 256

ReLU-3 [[1, 256, 56, 56]] [1, 256, 56, 56] 0

Conv2D-7 [[1, 64, 56, 56]] [1, 64, 56, 56] 36,864

BatchNorm2D-7 [[1, 64, 56, 56]] [1, 64, 56, 56] 256

Conv2D-8 [[1, 64, 56, 56]] [1, 256, 56, 56] 16,384

BatchNorm2D-8 [[1, 256, 56, 56]] [1, 256, 56, 56] 1,024

BottleneckBlock-2 [[1, 256, 56, 56]] [1, 256, 56, 56] 0

Conv2D-9 [[1, 256, 56, 56]] [1, 64, 56, 56] 16,384

BatchNorm2D-9 [[1, 64, 56, 56]] [1, 64, 56, 56] 256

ReLU-4 [[1, 256, 56, 56]] [1, 256, 56, 56] 0

Conv2D-10 [[1, 64, 56, 56]] [1, 64, 56, 56] 36,864

BatchNorm2D-10 [[1, 64, 56, 56]] [1, 64, 56, 56] 256

Conv2D-11 [[1, 64, 56, 56]] [1, 256, 56, 56] 16,384

BatchNorm2D-11 [[1, 256, 56, 56]] [1, 256, 56, 56] 1,024

BottleneckBlock-3 [[1, 256, 56, 56]] [1, 256, 56, 56] 0

Conv2D-13 [[1, 256, 56, 56]] [1, 128, 56, 56] 32,768

BatchNorm2D-13 [[1, 128, 56, 56]] [1, 128, 56, 56] 512

ReLU-5 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-14 [[1, 128, 56, 56]] [1, 128, 28, 28] 147,456

BatchNorm2D-14 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

Conv2D-15 [[1, 128, 28, 28]] [1, 512, 28, 28] 65,536

BatchNorm2D-15 [[1, 512, 28, 28]] [1, 512, 28, 28] 2,048

Conv2D-12 [[1, 256, 56, 56]] [1, 512, 28, 28] 131,072

BatchNorm2D-12 [[1, 512, 28, 28]] [1, 512, 28, 28] 2,048

BottleneckBlock-4 [[1, 256, 56, 56]] [1, 512, 28, 28] 0

Conv2D-16 [[1, 512, 28, 28]] [1, 128, 28, 28] 65,536

BatchNorm2D-16 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

ReLU-6 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-17 [[1, 128, 28, 28]] [1, 128, 28, 28] 147,456

BatchNorm2D-17 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

Conv2D-18 [[1, 128, 28, 28]] [1, 512, 28, 28] 65,536

BatchNorm2D-18 [[1, 512, 28, 28]] [1, 512, 28, 28] 2,048

BottleneckBlock-5 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-19 [[1, 512, 28, 28]] [1, 128, 28, 28] 65,536

BatchNorm2D-19 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

ReLU-7 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-20 [[1, 128, 28, 28]] [1, 128, 28, 28] 147,456

BatchNorm2D-20 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

Conv2D-21 [[1, 128, 28, 28]] [1, 512, 28, 28] 65,536

BatchNorm2D-21 [[1, 512, 28, 28]] [1, 512, 28, 28] 2,048

BottleneckBlock-6 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-22 [[1, 512, 28, 28]] [1, 128, 28, 28] 65,536

BatchNorm2D-22 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

ReLU-8 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-23 [[1, 128, 28, 28]] [1, 128, 28, 28] 147,456

BatchNorm2D-23 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

Conv2D-24 [[1, 128, 28, 28]] [1, 512, 28, 28] 65,536

BatchNorm2D-24 [[1, 512, 28, 28]] [1, 512, 28, 28] 2,048

BottleneckBlock-7 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-25 [[1, 512, 28, 28]] [1, 128, 28, 28] 65,536

BatchNorm2D-25 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

ReLU-9 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-26 [[1, 128, 28, 28]] [1, 128, 28, 28] 147,456

BatchNorm2D-26 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

Conv2D-27 [[1, 128, 28, 28]] [1, 512, 28, 28] 65,536

BatchNorm2D-27 [[1, 512, 28, 28]] [1, 512, 28, 28] 2,048

BottleneckBlock-8 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-28 [[1, 512, 28, 28]] [1, 128, 28, 28] 65,536

BatchNorm2D-28 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

ReLU-10 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-29 [[1, 128, 28, 28]] [1, 128, 28, 28] 147,456

BatchNorm2D-29 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

Conv2D-30 [[1, 128, 28, 28]] [1, 512, 28, 28] 65,536

BatchNorm2D-30 [[1, 512, 28, 28]] [1, 512, 28, 28] 2,048

BottleneckBlock-9 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-31 [[1, 512, 28, 28]] [1, 128, 28, 28] 65,536

BatchNorm2D-31 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

ReLU-11 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-32 [[1, 128, 28, 28]] [1, 128, 28, 28] 147,456

BatchNorm2D-32 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

Conv2D-33 [[1, 128, 28, 28]] [1, 512, 28, 28] 65,536

BatchNorm2D-33 [[1, 512, 28, 28]] [1, 512, 28, 28] 2,048

BottleneckBlock-10 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-34 [[1, 512, 28, 28]] [1, 128, 28, 28] 65,536

BatchNorm2D-34 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

ReLU-12 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-35 [[1, 128, 28, 28]] [1, 128, 28, 28] 147,456

BatchNorm2D-35 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

Conv2D-36 [[1, 128, 28, 28]] [1, 512, 28, 28] 65,536

BatchNorm2D-36 [[1, 512, 28, 28]] [1, 512, 28, 28] 2,048

BottleneckBlock-11 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-38 [[1, 512, 28, 28]] [1, 256, 28, 28] 131,072

BatchNorm2D-38 [[1, 256, 28, 28]] [1, 256, 28, 28] 1,024

ReLU-13 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-39 [[1, 256, 28, 28]] [1, 256, 14, 14] 589,824

BatchNorm2D-39 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-40 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-40 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

Conv2D-37 [[1, 512, 28, 28]] [1, 1024, 14, 14] 524,288

BatchNorm2D-37 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-12 [[1, 512, 28, 28]] [1, 1024, 14, 14] 0

Conv2D-41 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-41 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-14 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-42 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-42 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-43 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-43 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-13 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-44 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-44 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-15 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-45 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-45 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-46 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-46 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-14 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-47 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-47 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-16 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-48 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-48 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-49 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-49 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-15 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-50 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-50 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-17 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-51 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-51 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-52 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-52 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-16 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-53 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-53 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-18 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-54 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-54 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-55 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-55 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-17 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-56 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-56 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-19 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-57 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-57 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-58 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-58 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-18 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-59 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-59 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-20 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-60 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-60 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-61 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-61 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-19 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-62 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-62 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-21 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-63 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-63 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-64 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-64 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-20 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-65 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-65 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-22 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-66 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-66 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-67 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-67 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-21 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-68 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-68 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-23 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-69 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-69 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-70 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-70 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-22 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-71 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-71 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-24 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-72 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-72 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-73 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-73 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-23 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-74 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-74 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-25 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-75 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-75 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-76 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-76 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-24 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-77 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-77 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-26 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-78 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-78 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-79 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-79 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-25 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-80 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-80 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-27 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-81 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-81 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-82 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-82 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-26 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-83 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-83 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-28 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-84 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-84 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-85 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-85 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-27 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-86 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-86 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-29 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-87 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-87 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-88 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-88 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-28 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-89 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-89 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-30 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-90 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-90 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-91 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-91 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-29 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-92 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-92 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-31 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-93 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-93 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-94 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-94 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-30 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-95 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-95 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-32 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-96 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-96 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-97 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-97 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-31 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-98 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-98 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-33 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-99 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-99 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-100 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-100 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-32 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-101 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-101 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-34 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-102 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-102 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-103 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-103 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-33 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-104 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-104 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-35 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-105 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-105 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-106 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-106 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-34 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-107 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-107 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-36 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-108 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-108 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-109 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-109 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-35 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-110 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-110 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-37 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-111 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-111 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-112 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-112 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-36 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-113 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-113 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-38 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-114 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-114 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-115 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-115 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-37 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-116 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-116 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-39 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-117 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-117 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-118 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-118 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-38 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-119 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-119 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-40 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-120 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-120 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-121 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-121 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-39 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-122 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-122 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-41 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-123 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-123 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-124 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-124 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-40 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-125 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-125 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-42 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-126 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-126 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-127 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-127 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-41 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-128 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-128 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-43 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-129 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-129 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-130 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-130 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-42 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-131 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-131 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-44 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-132 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-132 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-133 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-133 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-43 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-134 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-134 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-45 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-135 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-135 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-136 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-136 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-44 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-137 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-137 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-46 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-138 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-138 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-139 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-139 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-45 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-140 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-140 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-47 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-141 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-141 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-142 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-142 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-46 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-143 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-143 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-48 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-144 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-144 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-145 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-145 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-47 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-147 [[1, 1024, 14, 14]] [1, 512, 14, 14] 524,288

BatchNorm2D-147 [[1, 512, 14, 14]] [1, 512, 14, 14] 2,048

ReLU-49 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 0

Conv2D-148 [[1, 512, 14, 14]] [1, 512, 7, 7] 2,359,296

BatchNorm2D-148 [[1, 512, 7, 7]] [1, 512, 7, 7] 2,048

Conv2D-149 [[1, 512, 7, 7]] [1, 2048, 7, 7] 1,048,576

BatchNorm2D-149 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 8,192

Conv2D-146 [[1, 1024, 14, 14]] [1, 2048, 7, 7] 2,097,152

BatchNorm2D-146 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 8,192

BottleneckBlock-48 [[1, 1024, 14, 14]] [1, 2048, 7, 7] 0

Conv2D-150 [[1, 2048, 7, 7]] [1, 512, 7, 7] 1,048,576

BatchNorm2D-150 [[1, 512, 7, 7]] [1, 512, 7, 7] 2,048

ReLU-50 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 0

Conv2D-151 [[1, 512, 7, 7]] [1, 512, 7, 7] 2,359,296

BatchNorm2D-151 [[1, 512, 7, 7]] [1, 512, 7, 7] 2,048

Conv2D-152 [[1, 512, 7, 7]] [1, 2048, 7, 7] 1,048,576

BatchNorm2D-152 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 8,192

BottleneckBlock-49 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 0

Conv2D-153 [[1, 2048, 7, 7]] [1, 512, 7, 7] 1,048,576

BatchNorm2D-153 [[1, 512, 7, 7]] [1, 512, 7, 7] 2,048

ReLU-51 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 0

Conv2D-154 [[1, 512, 7, 7]] [1, 512, 7, 7] 2,359,296

BatchNorm2D-154 [[1, 512, 7, 7]] [1, 512, 7, 7] 2,048

Conv2D-155 [[1, 512, 7, 7]] [1, 2048, 7, 7] 1,048,576

BatchNorm2D-155 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 8,192

BottleneckBlock-50 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 0

AdaptiveAvgPool2D-1 [[1, 2048, 7, 7]] [1, 2048, 1, 1] 0

Linear-1 [[1, 2048]] [1, 173] 354,477

===============================================================================

Total params: 58,649,709

Trainable params: 58,346,861

Non-trainable params: 302,848

-------------------------------------------------------------------------------

Input size (MB): 0.57

Forward/backward pass size (MB): 552.42

Params size (MB): 223.73

Estimated Total Size (MB): 776.72

-------------------------------------------------------------------------------

{'total_params': 58649709, 'trainable_params': 58346861}
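You can check the `Linear-1` row by hand. ResNet152 ends in 2048 pooled features, so a fully connected layer with 173 outputs has 2048 × 173 weights plus 173 biases. The tiny helper below (`linear_params` is an illustrative name) compares this with the 1000-way ImageNet head:

```python
def linear_params(in_features, out_features, bias=True):
    """Parameter count of a fully connected layer: weight matrix plus optional bias vector."""
    return in_features * out_features + (out_features if bias else 0)


new_head = linear_params(2048, 173)        # the Linear-1 row of the summary
imagenet_head = linear_params(2048, 1000)  # the pretrained head whose weights were skipped
print(new_head, imagenet_head)
```

It prints `354477 2049000`. The first value matches the summary. The shape mismatch between the two heads is what triggers the "Skip loading for fc.weight / fc.bias" warnings, so only this last layer is trained from scratch.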

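(2) Defining the loss function and accuracy metric

- We use the cross-entropy loss, which is common in classification tasks.

- The loss is computed per sample, so it has to be averaged over the batch. `paddle.nn.CrossEntropyLoss` does this by default (`reduction='mean'`).

- We also define an accuracy metric so that the classification accuracy is reported during training.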
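The NumPy sketch below shows what the loss and metric compute for one batch. It assumes raw logits (no softmax in the model) and integer labels. The loss is softmax cross-entropy averaged over the batch, which mirrors the default `reduction='mean'` of `paddle.nn.CrossEntropyLoss`. The metric is top-1 accuracy, which is what `paddle.metric.Accuracy()` reports with its default `topk=(1,)`. The function names are illustrative.

```python
import numpy as np


def softmax_cross_entropy(logits, labels):
    """Mean cross-entropy over the batch; logits: (N, C) raw scores, labels: (N,) class ids."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # subtract the row max for stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    per_sample = -log_probs[np.arange(len(labels)), labels]
    return per_sample.mean()  # average over the batch


def top1_accuracy(logits, labels):
    """Fraction of samples whose highest-scoring class equals the label."""
    return float((logits.argmax(axis=1) == labels).mean())
```

For a model that knows nothing (all logits equal), the loss is ln(173) ≈ 5.15. This is a useful reference point for the first training steps.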

(3) Defining the optimizer

- We use the Adam optimizer with a learning rate of 0.001.
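For reference, here is the textbook Adam update (Kingma and Ba), sketched in NumPy with Paddle's default hyperparameters (`beta1=0.9`, `beta2=0.999`, `epsilon=1e-8`). It is an illustration of the algorithm that `paddle.optimizer.Adam` is based on, not Paddle's kernel. Paddle's kernel may fold the bias correction and epsilon in slightly differently.

```python
import numpy as np


def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update at step t (1-based); returns the new (param, m, v)."""
    m = beta1 * m + (1 - beta1) * grad       # running mean of gradients (first moment)
    v = beta2 * v + (1 - beta2) * grad ** 2  # running mean of squared gradients (second moment)
    m_hat = m / (1 - beta1 ** t)             # bias correction for the zero initialization
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (np.sqrt(v_hat) + eps)
    return param, m, v
```

On the first step, the bias-corrected moments equal g and g². Every parameter with a nonzero gradient therefore moves by about `lr` against the sign of its gradient, whatever the gradient's magnitude.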

Step 3: Training and evaluation

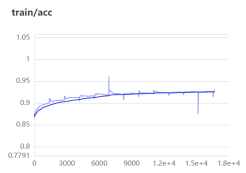

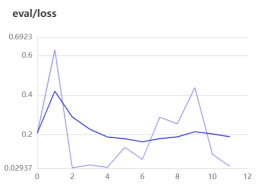

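Training was carried out in three stages:

- Epochs 0-19, learning rate 0.01
  - Training set: the loss dropped quickly, and accuracy rose to 0.80929 over the 20 epochs
  - Test set: the loss dropped quickly, and accuracy rose quickly to 0.7384
- Resumed from checkpoint 16 of the epoch 0-19 run and trained for 14 more epochs at learning rate 0.001
  - Training set: the loss decreased with oscillations; accuracy rose from 0.80929 to 0.85714
  - Test set: the loss oscillated; accuracy rose from 0.7384 to 0.7592
- Resumed from the checkpoint at epoch 16 + 14 = 30 and trained for 11 more epochs at learning rate 0.0001
  - Training set: the loss decreased with oscillations; accuracy rose from 0.85714 to 0.92927
  - Test set: accuracy leveled off; the best value, 0.82684, came in the 7th epoch of this stage

Training accuracy kept rising while test accuracy had nearly converged, so further training would likely lead to overfitting. Training therefore stopped here.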
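The stopping decision above can be made mechanical with a patience rule: stop once test accuracy stops improving while training accuracy keeps rising. Below is a minimal pure-Python sketch of that rule; `select_best_epoch` and `patience` are our own illustrative names. Paddle 2.x also provides a built-in `paddle.callbacks.EarlyStopping` callback for the same purpose.

```python
def select_best_epoch(val_accs, patience=3):
    """Return (best_epoch, stop_epoch), both 1-based.

    Stops as soon as `patience` epochs have passed without beating the best validation accuracy.
    """
    best_acc, best_epoch = float('-inf'), 0
    for epoch, acc in enumerate(val_accs, start=1):
        if acc > best_acc:
            best_acc, best_epoch = acc, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch
    return best_epoch, len(val_accs)
```

Fed the test accuracies of the first nine epochs in the log below (0.3776 through 0.6950), it reports epoch 9 as the best and does not stop. The dip at epoch 8 lasts only one epoch.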

```python
model.prepare(optimizer=paddle.optimizer.Adam(learning_rate=0.001, parameters=model.parameters()),
              loss=paddle.nn.CrossEntropyLoss(),
              metrics=paddle.metric.Accuracy())

# VisualDL callback for visualizing training
visualdl = paddle.callbacks.VisualDL(log_dir='visualdl_log')

# launch the full training loop
model.fit(train_dataset,                    # training set
          eval_dataset,                     # evaluation set
          epochs=20,                        # total number of epochs
          batch_size=64,                    # samples per batch
          shuffle=True,                     # shuffle the training samples
          verbose=1,                        # log display format
          save_dir='0_20_resnet152_0.001',  # checkpoint directory for this training stage
          callbacks=[visualdl])             # callbacks
```

The loss value printed in the log is the current step, and the metric is the average value of previous steps.

Epoch 1/20

step 1378/1378 [==============================] - loss: 2.0446 - acc: 0.2861 - 538ms/step

save checkpoint at /home/aistudio/0_20_resnet152_0.001/0

Eval begin...

step 345/345 [==============================] - loss: 1.2795 - acc: 0.3776 - 256ms/step

Eval samples: 22049

Epoch 2/20

step 1378/1378 [==============================] - loss: 1.5286 - acc: 0.5133 - 523ms/step

save checkpoint at /home/aistudio/0_20_resnet152_0.001/1

Eval begin...

step 345/345 [==============================] - loss: 0.7893 - acc: 0.5104 - 239ms/step

Eval samples: 22049

Epoch 3/20

step 1378/1378 [==============================] - loss: 1.1590 - acc: 0.5851 - 524ms/step

save checkpoint at /home/aistudio/0_20_resnet152_0.001/2

Eval begin...

step 345/345 [==============================] - loss: 0.6739 - acc: 0.5879 - 240ms/step

Eval samples: 22049

Epoch 4/20

step 1378/1378 [==============================] - loss: 1.1931 - acc: 0.6275 - 522ms/step

save checkpoint at /home/aistudio/0_20_resnet152_0.001/3

Eval begin...

step 345/345 [==============================] - loss: 0.2853 - acc: 0.6071 - 237ms/step

Eval samples: 22049

Epoch 5/20

step 1378/1378 [==============================] - loss: 1.3321 - acc: 0.6558 - 523ms/step

save checkpoint at /home/aistudio/0_20_resnet152_0.001/4

Eval begin...

step 345/345 [==============================] - loss: 0.5777 - acc: 0.6388 - 242ms/step

Eval samples: 22049

Epoch 6/20

step 1378/1378 [==============================] - loss: 1.4460 - acc: 0.6820 - 524ms/step

save checkpoint at /home/aistudio/0_20_resnet152_0.001/5

Eval begin...

step 345/345 [==============================] - loss: 0.5820 - acc: 0.6541 - 244ms/step

Eval samples: 22049

Epoch 7/20

step 1378/1378 [==============================] - loss: 1.0251 - acc: 0.6960 - 523ms/step

save checkpoint at /home/aistudio/0_20_resnet152_0.001/6

Eval begin...

step 345/345 [==============================] - loss: 0.3825 - acc: 0.6865 - 239ms/step

Eval samples: 22049

Epoch 8/20

step 1378/1378 [==============================] - loss: 0.8302 - acc: 0.7145 - 523ms/step

save checkpoint at /home/aistudio/0_20_resnet152_0.001/7

Eval begin...

step 345/345 [==============================] - loss: 0.0702 - acc: 0.6644 - 240ms/step

Eval samples: 22049

Epoch 9/20

step 1378/1378 [==============================] - loss: 1.0031 - acc: 0.7274 - 523ms/step

save checkpoint at /home/aistudio/0_20_resnet152_0.001/8

Eval begin...

step 345/345 [==============================] - loss: 0.3258 - acc: 0.6950 - 240ms/step

Eval samples: 22049

Epoch 10/20

step 1378/1378 [==============================] - loss: 0.9562 - acc: 0.7366 - 522ms/step

save checkpoint at /home/aistudio/0_20_resnet152_0.001/9

Eval begin...

step 345/345 [==============================] - loss: 0.2758 - acc: 0.7111 - 240ms/step

Eval samples: 22049

Epoch 11/20

step 1378/1378 [==============================] - loss: 0.9920 - acc: 0.7492 - 524ms/step

save checkpoint at /home/aistudio/0_20_resnet152_0.001/10

Eval begin...

step 345/345 [==============================] - loss: 0.1771 - acc: 0.7077 - 240ms/step

Eval samples: 22049

Epoch 12/20

step 1378/1378 [==============================] - loss: 0.8259 - acc: 0.7558 - 523ms/step

save checkpoint at /home/aistudio/0_20_resnet152_0.001/11

Eval begin...

step 345/345 [==============================] - loss: 0.1928 - acc: 0.7075 - 241ms/step

Eval samples: 22049

Epoch 13/20

step 1378/1378 [==============================] - loss: 0.8234 - acc: 0.7649 - 523ms/step

save checkpoint at /home/aistudio/0_20_resnet152_0.001/12

Eval begin...

step 345/345 [==============================] - loss: 0.4049 - acc: 0.7215 - 243ms/step

Eval samples: 22049

Epoch 14/20

step 1378/1378 [==============================] - loss: 0.7254 - acc: 0.7730 - 523ms/step

save checkpoint at /home/aistudio/0_20_resnet152_0.001/13

Eval begin...

step 345/345 [==============================] - loss: 0.3297 - acc: 0.7173 - 239ms/step

Eval samples: 22049

Epoch 15/20

step 1378/1378 [==============================] - loss: 0.7475 - acc: 0.7817 - 523ms/step

save checkpoint at /home/aistudio/0_20_resnet152_0.001/14

Eval begin...

step 345/345 [==============================] - loss: 0.1445 - acc: 0.7199 - 240ms/step

Eval samples: 22049

Epoch 16/20

step 1378/1378 [==============================] - loss: 0.7856 - acc: 0.7905 - 523ms/step

save checkpoint at /home/aistudio/0_20_resnet152_0.001/15

Eval begin...

step 345/345 [==============================] - loss: 0.3110 - acc: 0.7332 - 238ms/step

Eval samples: 22049

Epoch 17/20

step 1378/1378 [==============================] - loss: 1.0853 - acc: 0.7945 - 523ms/step

save checkpoint at /home/aistudio/0_20_resnet152_0.001/16

Eval begin...

step 345/345 [==============================] - loss: 0.2105 - acc: 0.7461 - 240ms/step

Eval samples: 22049

Epoch 18/20

step 1378/1378 [==============================] - loss: 1.1084 - acc: 0.8027 - 523ms/step

save checkpoint at /home/aistudio/0_20_resnet152_0.001/17

Eval begin...

step 345/345 [==============================] - loss: 0.1809 - acc: 0.7313 - 241ms/step

Eval samples: 22049

Epoch 19/20

step 1378/1378 [==============================] - loss: 1.1126 - acc: 0.8079 - 525ms/step

save checkpoint at /home/aistudio/0_20_resnet152_0.001/18

Eval begin...

step 345/345 [==============================] - loss: 0.0287 - acc: 0.7268 - 241ms/step

Eval samples: 22049

Epoch 20/20

step 1378/1378 [==============================] - loss: 0.6276 - acc: 0.8125 - 524ms/step

save checkpoint at /home/aistudio/0_20_resnet152_0.001/19

Eval begin...

step 345/345 [==============================] - loss: 0.2583 - acc: 0.7326 - 240ms/step

Eval samples: 22049

save checkpoint at /home/aistudio/0_20_resnet152_0.001/final
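The per-epoch eval accuracies above can be scanned to pick the best checkpoint. A minimal sketch, with the values copied by hand from this log: the peak (0.7461) is at epoch 17, which model.fit saved under directory 16 because checkpoint directories are numbered from 0. That is consistent with the second stage resuming from checkpoint 16.

```python
# Eval accuracies copied from the 20-epoch log above (epochs 1..20)
eval_acc = [0.3776, 0.5104, 0.5879, 0.6071, 0.6388, 0.6541, 0.6865, 0.6644,
            0.6950, 0.7111, 0.7077, 0.7075, 0.7215, 0.7173, 0.7199, 0.7332,
            0.7461, 0.7313, 0.7268, 0.7326]

# Index of the best epoch; checkpoint directories use this 0-based index
best_idx = max(range(len(eval_acc)), key=lambda i: eval_acc[i])

# How many epochs ran after the best one without improving on it
epochs_since_best = len(eval_acc) - 1 - best_idx

print(f"best eval acc {eval_acc[best_idx]} at epoch {best_idx + 1}, "
      f"checkpoint dir {best_idx}, {epochs_since_best} epochs since")
```

Recent Paddle 2.x releases also provide paddle.callbacks.EarlyStopping, which can monitor an eval metric such as acc and keep the best model automatically; check its parameters against the installed version.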

To view the training curves in VisualDL, run the following command in a terminal. It is a shell command, not Python, so running it directly in a notebook cell fails with "SyntaxError: can't assign to operator".

```shell
visualdl --logdir=visualdl_log/ --port=8040
```

Saving the inference model with model.save('infer/mnist', training=False) failed with:

RuntimeError: Saving inference model needs 'inputs' or running before saving. Please specify 'inputs' in Model initialization or input training data and perform a training for shape derivation.

The paddle.Model was created without an inputs spec and, apparently, no training had run in the current kernel session, so Paddle could not infer the input shape. Either pass inputs=[InputSpec(...)] when constructing paddle.Model, or export the underlying network with an explicit input spec as below. The 224×224 input size is an assumption taken from the prediction transforms.

```python
from paddle.static import InputSpec

# Export the wrapped network as an inference model; the batch dimension is left as None
paddle.jit.save(model.network, 'infer/mnist',
                input_spec=[InputSpec(shape=[None, 3, 224, 224], dtype='float32')])
```

Step5. Model prediction

- Load the model parameters

- Preprocess the image to be predicted

- Run the prediction

```python
import numpy as np
import paddle
import paddle.vision.transforms as T
from PIL import Image

# Load the trained weights into a matching network
model_state_dict = paddle.load('50epoches_chk/final.pdparams')
model = paddle.vision.models.resnet50(num_classes=173)
model.set_state_dict(model_state_dict)
model.eval()

image_file = './splitedDataset/train/108/5_41.jpg'
# image_file = './splitedDataset/Yu-Shiang Shredded Pork.webp'
# Other test images: braised pork in brown sauce.jfif \ 1.webp \ rice.jfif \ Yu-Shiang Shredded Pork.webp

transforms = T.Compose([
    # Deterministic resize + center crop at inference time; random cropping
    # (RandomResizedCrop) is a training-time augmentation
    T.Resize(256),
    T.CenterCrop(224),
    T.ToTensor(),  # HWC uint8 -> CHW float in [0, 1]
    T.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])  # per-channel normalization
])

img = Image.open(image_file)  # read the image
if img.mode != 'RGB':
    img = img.convert('RGB')
img = transforms(img)
img = paddle.unsqueeze(img, axis=0)  # add the batch dimension

# Class names, one per line in FoodList.txt
foodLabel = './FoodList.txt'
foodList = []
with open(foodLabel) as f:
    for line in f.readlines():
        info = line.split('\t')
        if len(info) > 0:
            foodList.append(info)

with paddle.no_grad():
    logits = model(img)  # forward pass
pred = int(np.argmax(logits.numpy()))
# Class IDs start at 1 (hence num_classes=173), so shift by one to index foodList
print('Prediction:', pred, foodList[pred - 1])

Image.open(image_file)  # display the image as the cell output
```

Prediction: 108 ['Four-Joy Meatballs\n']
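The preprocessing and label lookup above can be checked by hand. This sketch mirrors, for a single pixel, what T.ToTensor (scale 0..255 to 0..1) followed by T.Normalize ((x - mean) / std per channel) computes, and the one-based class-ID shift used when indexing the label list. normalize_pixel, label_name and the class names in the example are hypothetical.

```python
# Per-channel ImageNet statistics passed to T.Normalize above
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]

def normalize_pixel(rgb):
    """Map one 8-bit RGB pixel to the model's input scale.

    Same arithmetic as T.ToTensor (divide by 255) followed by
    T.Normalize ((x - mean) / std, channel by channel).
    """
    return [(v / 255.0 - m) / s for v, m, s in zip(rgb, MEAN, STD)]

def label_name(pred_class, food_list):
    # Class IDs appear to start at 1 (hence num_classes=173), while the
    # label file is read into a 0-based Python list, so shift by one
    return food_list[pred_class - 1]

print(normalize_pixel([255, 255, 255]))  # a white pixel, roughly [2.249, 2.429, 2.64]
print(normalize_pixel([0, 0, 0]))        # a black pixel, all channels negative
print(label_name(1, ["class-1", "class-2"]))  # hypothetical names -> class-1
```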

![output_20_1.png](https://i-blog.csdnimg.cn/blog_migrate/dbde4d35467d45f795ec234c41a0d7a1.png)

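Resuming training from a checkpoint:

Early in training we want fast progress, so a learning rate of 0.01 is used. Training then continues from the saved checkpoint with a smaller learning rate to push accuracy higher.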

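- Load the model parameters (.pdparams)

- Load the optimizer state (.pdopt)

- Rebuild the training and evaluation datasets

- Resume training with a smaller learning rate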
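Why load the .pdopt file as well as the weights? Adam keeps running moment estimates and a step counter, and those are part of the training state. The toy sketch below minimizes (p - 3)^2 with textbook Adam (not Paddle's code; lr=0.5 is an arbitrary choice that makes the effect visible). Restoring both weights and optimizer state reproduces an uninterrupted run exactly, while restoring only the weights does not. In this project the learning rate is also lowered on resume, so the runs would not coincide anyway, but the restored moment estimates still carry over.

```python
import math

def adam_step(p, g, state, lr, beta1=0.9, beta2=0.999, eps=1e-8):
    """One textbook Adam update of a scalar parameter; state = (m, v, t)."""
    m, v, t = state
    t += 1
    m = beta1 * m + (1 - beta1) * g
    v = beta2 * v + (1 - beta2) * g * g
    m_hat = m / (1 - beta1 ** t)  # bias-corrected first moment
    v_hat = v / (1 - beta2 ** t)  # bias-corrected second moment
    return p - lr * m_hat / (math.sqrt(v_hat) + eps), (m, v, t)

def grad(p):
    # Gradient of the toy loss (p - 3)^2
    return 2.0 * (p - 3.0)

def train(p, state, steps, lr=0.5):
    for _ in range(steps):
        p, state = adam_step(p, grad(p), state, lr)
    return p, state

fresh_state = (0.0, 0.0, 0)

# Uninterrupted run: 20 steps
p_continuous, _ = train(0.0, fresh_state, 20)

# Interrupted run: 10 steps, "checkpoint" weights AND optimizer state, then 10 more
ckpt_p, ckpt_state = train(0.0, fresh_state, 10)
p_resumed, _ = train(ckpt_p, ckpt_state, 10)

# Interrupted run that restores only the weights (optimizer state starts over)
p_fresh, _ = train(ckpt_p, fresh_state, 10)

print(p_continuous, p_resumed, p_fresh)
```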

```python
import paddle
from dataset import FoodDataset  # the author's custom dataset class

train_data = './train_data1.txt'
validation_data = './valida_data1.txt'
train_dataset = FoodDataset(train_data)
eval_dataset = FoodDataset(validation_data)

# Rebuild the network and load the saved weights
network = paddle.vision.models.resnet101(num_classes=173)
params_dict = paddle.load('15_15_resnet101_0.0001/final.pdparams')
network.set_state_dict(params_dict)
model = paddle.Model(network)

# Recreate the optimizer with the smaller learning rate and restore its saved state
opt = paddle.optimizer.Adam(learning_rate=0.0001, parameters=model.parameters())
opt_dict = paddle.load('15_15_resnet101_0.0001/final.pdopt')
opt.set_state_dict(opt_dict)

model.prepare(optimizer=opt,
              loss=paddle.nn.CrossEntropyLoss(),
              metrics=paddle.metric.Accuracy())

# Callback that logs training curves for the VisualDL visualization tool
visualdl = paddle.callbacks.VisualDL(log_dir='visualdl_log')

# Resume the full training loop (each keyword argument passed only once)
model.fit(train_dataset,                # training set
          eval_dataset,                 # evaluation set
          epochs=25,                    # total number of epochs
          batch_size=64,                # samples per batch
          shuffle=True,                 # shuffle the training samples
          verbose=1,                    # logging format
          save_dir='30_25_resnet101',   # checkpoint directory for this stage
          callbacks=[visualdl])         # callbacks to use
```

W0129 23:08:45.468212 6682 device_context.cc:447] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1

W0129 23:08:45.472950 6682 device_context.cc:465] device: 0, cuDNN Version: 7.6.

The loss value printed in the log is the current step, and the metric is the average value of previous steps.

Epoch 1/25

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/layers/utils.py:77: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working

return (isinstance(seq, collections.Sequence) and

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/nn/layer/norm.py:653: UserWarning: When training, we now always track global mean and variance.

"When training, we now always track global mean and variance.")

step 30/1378 [..............................] - loss: 0.4691 - acc: 0.8672 - ETA: 10:18 - 459ms/st

III. Project Summary

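- Results

This experiment uses PaddlePaddle and the VireoFood-172 dataset to build a food recognizer. The network is the ResNet model that ships with PaddlePaddle, whose residual connections mitigate the vanishing-gradient problem in deep networks.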
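A toy one-dimensional illustration of why residual connections help gradients survive depth: by the chain rule, a plain layer y = f(x) contributes a factor f'(x) to the input gradient, while a residual block y = x + f(x) contributes 1 + f'(x). This sketch assumes every layer has the same small local derivative (0.01); real ResNets also rely on BatchNorm and careful initialization, so treat it only as intuition. The depths simply echo the ResNet variant names.

```python
def chain_gradient(num_layers, local_grad, residual):
    """Input gradient of a toy 1-D chain where every layer has the same f'(x).

    Plain layer y = f(x) contributes f'(x); residual block y = x + f(x)
    contributes 1 + f'(x). The chain rule multiplies these factors.
    """
    per_layer = 1.0 + local_grad if residual else local_grad
    grad = 1.0
    for _ in range(num_layers):
        grad *= per_layer
    return grad

for depth in (50, 101, 152):
    plain = chain_gradient(depth, 0.01, residual=False)
    res = chain_gradient(depth, 0.01, residual=True)
    print(f"depth {depth}: plain {plain:.3e}, residual {res:.3f}")
```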

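Training proceeds in three stages. Stage one covers epochs 0-19 at a learning rate of 0.01; stage two resumes from epoch 16 of that run and trains 14 more epochs at 0.001; stage three resumes from the resulting checkpoint (16 + 14 = 30) and trains 11 more epochs at 0.0001. At the end of training, test accuracy reaches 0.82684 and training accuracy 0.92927.

- Analysis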

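The project started with ResNet50, but the results were unsatisfactory, so we retrained with deeper models, ResNet101 and ResNet152. As depth increased, both training and test accuracy improved noticeably.

- Future work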

The best test accuracy reached is only 0.82684. Future work will try other network architectures or further tune the hyperparameters to improve training and test accuracy.
