[PaddleHub] The Nationwide Fitness Craze: Letting AI Count Your Sit-Ups


Reposted from AI Studio. Original post:

[Genghong Girls] The Nationwide Fitness Craze: Letting AI Count Your Sit-Ups - PaddlePaddle AI Studio

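1. Background

Since the pandemic began, home fitness has swept the nation. People exercising at home inevitably turn to indoor exercises such as crunches and sit-ups. This project was created so you no longer have to keep count while exercising: let AI count your sit-ups for you.

2. Approach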

- 1.用户打开手机APP,将手机固定在场地一侧,适当设置手机角度,根据应用的自动语音提示调整身体与手机距离,直到人体完全位于识别框内,即可开始运动。

- 2.通过PaddleHub的human_pose_estimation_resnet50_mpii模型,进行人体关键点检测。

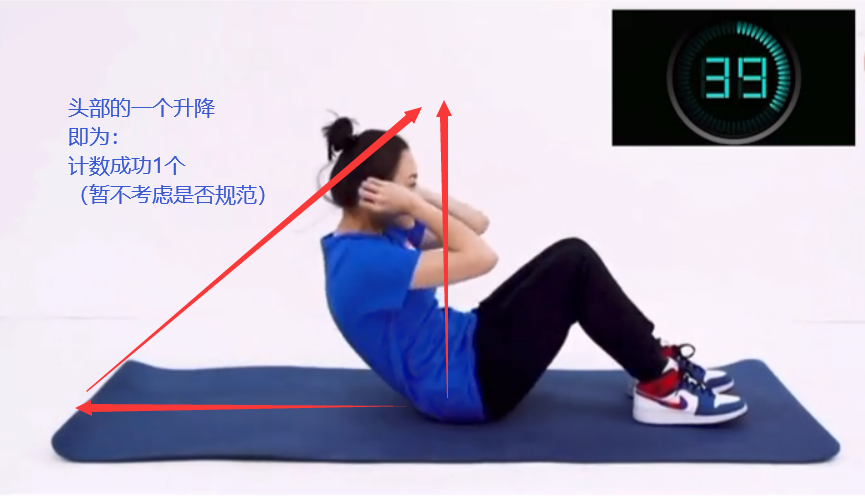

- 3.根据检测的数据计数(此处选择头部关键点进行判断,一次完整的上下为一次仰卧)
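The counting idea in step 3 can be sketched as a small two-state machine over the head's y coordinate. This is only an illustrative sketch, not the code used later in this post: the function name `count_reps`, the two thresholds, and the sample values are all assumptions. Note that in image coordinates y grows downward, so a smaller y means the head is higher.

```python
def count_reps(head_y, up_below, down_above):
    """Count sit-ups from per-frame head y values (image coordinates, y grows downward).

    up_below and down_above are hypothetical pixel thresholds: the head must rise
    above up_below and then drop below down_above to complete one repetition.
    """
    count = 0
    state = "down"  # assume the person starts lying down
    for y in head_y:
        if state == "down" and y < up_below:
            state = "up"  # head has risen high enough: sitting up
        elif state == "up" and y > down_above:
            state = "down"  # head is back near the floor
            count += 1  # one full up-and-down cycle completed
    return count


# Made-up head y values for two repetitions
samples = [300, 250, 120, 85, 130, 260, 305, 240, 90, 150, 300]
print(count_reps(samples, up_below=100, down_above=280))
```

Using two separate thresholds means small wobbles around a single cutoff do not register as extra repetitions.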

II. Environment Setup

1. Installing PaddleHub

In [1]

!pip install -U pip --user >log.log
!pip install -U paddlehub >log.log

WARNING: You are using pip version 22.0.4; however, version 22.1 is available. You should consider upgrading via the '/opt/conda/envs/python35-paddle120-env/bin/python -m pip install --upgrade pip' command.

In [ ]

!pip list |grep paddle

paddle2onnx 0.9.5
paddlehub 2.2.0
paddlenlp 2.0.7
paddlepaddle-gpu 2.2.2.post101
tb-paddle 0.3.6

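2. Installing the human_pose_estimation_resnet50_mpii Model

- Model page: PaddlePaddle, an open-source deep learning platform born from industrial practice

- Model overview: Human skeleton keypoint detection (pose estimation) is one of the fundamental algorithms of computer vision and underpins many vision tasks, such as action recognition, person tracking, and gait recognition. Its applications are concentrated in intelligent video surveillance, patient monitoring systems, human-computer interaction, virtual reality, human body animation, smart homes, intelligent security, and assisted training for athletes. The model's paper, "Simple Baselines for Human Pose Estimation and Tracking", was published by MSRA at ECCV18, and the model was trained on the MPII dataset.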

In [2]

!hub install human_pose_estimation_resnet50_mpii >log.log

[2022-05-12 15:07:19,455] [ INFO] - Successfully installed human_pose_estimation_resnet50_mpii-1.1.1

In [ ]

|human_pose_estimation_res|/home/aistudio/.paddlehub/modules/human_pose_estim|

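III. Human Keypoint Detection Example

1. Demo Video

Obtain a sit-up video yourself.

2. Keypoint Detection Demo

Keypoint detection is run on the following three images: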

In [ ]

import cv2
import paddlehub as hub

pose_estimation = hub.Module(name="human_pose_estimation_resnet50_mpii")
image1 = cv2.imread('work/1.png')  # sitting upright
image2 = cv2.imread('work/2.png')  # lying flat
image3 = cv2.imread('work/3.png')  # intermediate state
results = pose_estimation.keypoint_detection(images=[image1, image2, image3], visualization=True)

[2022-05-09 16:54:52,291] [ WARNING] - The _initialize method in HubModule will soon be deprecated, you can use the __init__() to handle the initialization of the object
W0509 16:54:52.295652 101 analysis_predictor.cc:1350] Deprecated. Please use CreatePredictor instead.
image saved in output_pose/ndarray_time=1652086492754842.jpg
image saved in output_pose/ndarray_time=1652086492754871.jpg
image saved in output_pose/ndarray_time=1652086492754875.jpg

In [ ]

# Print the keypoints
print(results[0]['data'])

OrderedDict([('left_ankle', [534, 305]), ('left_knee', [461, 213]), ('left_hip', [380, 289]), ('right_hip', [388, 269]), ('right_knee', [461, 209]), ('right_ankle', [527, 301]), ('pelvis', [388, 277]), ('thorax', [351, 176]), ('upper_neck', [366, 144]), ('head_top', [388, 80]), ('right_wrist', [402, 144]), ('right_elbow', [446, 209]), ('right_shoulder', [351, 184]), ('left_shoulder', [366, 168]), ('left_elbow', [439, 205]), ('left_wrist', [410, 144])])

Check the images written to output_pose:

3. How to Detect Sitting Up and Lying Down

What tells us that one sit-up has been completed? From the three images above, it is easy to conclude that the movement of the head coordinate can serve as the criterion.

In [ ]

# Print the head keypoint of each of the three images
print(results[0]['data']['head_top'])
print(results[1]['data']['head_top'])
print(results[2]['data']['head_top'])

[388, 80]
[87, 305]
[301, 107]
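To make these three readings easier to compare, the sketch below maps a head y value onto a 0-to-1 "rise fraction", using the lying-flat value (305) and the upright value (80) printed above as reference points. The helper name `rise_fraction` and the idea of normalizing are assumptions for illustration only; the reference values apply only to these sample images and would differ for another camera setup. Because image y grows downward, the upright posture has the smaller y.

```python
def rise_fraction(head_y, lying_y=305, upright_y=80):
    """Return how far the head has risen: 0.0 when lying flat, 1.0 when upright.

    lying_y and upright_y default to the head_top y values printed above for the
    lying and upright sample images (an assumption tied to those images).
    """
    return (lying_y - head_y) / (lying_y - upright_y)


# head_top y values of the three sample images: upright, lying, intermediate
for y in (80, 305, 107):
    print(y, round(rise_fraction(y), 2))
```

For the intermediate image the head is already most of the way up, which suggests the head moves quickly in the early part of the sit-up.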

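IV. Smart Counting

Improvement: run prediction on the GPU.

A 10-second video: run time of about 18 seconds.

A 70-second video: run time of about 70 seconds.

The speed really takes off~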

In [4]

import math
import os

import cv2
import paddlehub as hub
from matplotlib import pyplot as plt

os.environ['CUDA_VISIBLE_DEVICES'] = '0'  # run prediction on GPU 0
%matplotlib inline


def countYwqz():
    """Run keypoint detection on every frame; return frame indices and head-top y values."""
    pose_estimation = hub.Module(name="human_pose_estimation_resnet50_mpii")
    num = 0
    all_num = []    # frame indices
    flip_list = []  # head_top y coordinate of each frame
    fps = 60        # output frame rate (hard-coded; match the source video for real-time playback)
    # The source can be a web video stream or a file
    file_name = 'work/ywqz.mp4'
    cap = cv2.VideoCapture(file_name)
    width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    fourcc = cv2.VideoWriter_fourcc(*'mp4v')
    # `out` is used afterwards to assemble the annotated frames into a video
    out = cv2.VideoWriter('output.mp4', fourcc, fps, (width, height))
    while cap.isOpened():
        success, image = cap.read()
        if not success:
            break
        # visualization=True saves each annotated frame under output_pose/
        results = pose_estimation.keypoint_detection(images=[image], visualization=True, use_gpu=True)
        flip = results[0]['data']['head_top'][1]  # y coordinate of the head top
        flip_list.append(flip)
        all_num.append(num)
        num += 1
    cap.release()
    # Write the video
    img_root = "output_pose/"
    # Sort by the timestamp in the file name; otherwise the frames are assembled out of order.
    # Images left in output_pose/ by earlier runs are included too, so clear the folder first.
    im_names = os.listdir(img_root)
    im_names.sort(key=lambda x: int(x.replace("ndarray_time=", "").split('.')[0]))
    for im_name in im_names:
        img = os.path.join(img_root, im_name)
        print(img)
        frame = cv2.imread(img)
        out.write(frame)
    out.release()
    return all_num, flip_list


def get_count(x, y):
    """Count sit-ups by counting direction reversals in the head-top y series."""
    count = 0
    flag = False
    count_list = [0]  # y values recorded at the extrema
    for i in range(len(y) - 1):
        if y[i] <= y[i + 1] and flag == False:
            continue
        elif y[i] >= y[i + 1] and flag == True:
            continue
        else:
            # Keep slight jitter from being counted: some frame within 3 frames of i
            # must be more than 100 px away from the last recorded extremum.
            window = y[max(0, i - 3):i + 4]  # clamped so the indices never run past either end
            if any(abs(count_list[-1] - v) > 100 for v in window):
                count = count + 1
                count_list.append(y[i])
                print(x[i])
            flag = not flag
    # Each sit-up contributes two extrema (one up, one down)
    return math.floor(count / 2)


if __name__ == "__main__":
    x, y = countYwqz()
    plt.figure(figsize=(8, 8))
    count = get_count(x, y)
    plt.title(f"point numbers: {count}")
    plt.plot(x, y)
    plt.show()
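One optional way to make the reversal counting less sensitive to frame-to-frame jitter is to smooth the head y series before passing it to `get_count`. The moving average below is a swapped-in technique for illustration, not part of the original project; the function name `moving_average` and the window size are assumptions.

```python
def moving_average(values, window=5):
    """Smooth a sequence with a centered moving average.

    Near the ends the window shrinks to the samples that exist, so the output
    has the same length as the input.
    """
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        chunk = values[max(0, i - half):i + half + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


# A single-frame spike is spread out and reduced in height
print(moving_average([0, 0, 30, 0, 0], window=3))
```

With the functions above, one would call something like `get_count(x, moving_average(y))`. A wider window suppresses more jitter but also flattens genuine peaks, so the 100 px guard in `get_count` may need retuning.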

1. Counting result

2. Generated video

In the root directory you will find:

output.mp4

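Summary

This project borrows the approach of Javaroom's implementation and improves on it: parts of the code were reworked for readability, and sit-up counting was implemented successfully.

Reference project: [Super Simple, in 50 Lines of Code] A PaddleHub-Based Jump-Rope AI Counter

About the author

Same handle on every platform:

iterhui

I have reached the top level on AI Studio and lit up 10 badges. Follow me and I'll follow back~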
