[Paddle Competitions] ECG Intelligent Diagnosis Competition Baseline (0.6765)


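Resources

⭐ ⭐ ⭐ Please give it a Star to show your support! ⭐ ⭐ ⭐

Open source takes effort, so if this project helps you, your support is much appreciated.

- For more transformer models in CV and NLP (BERT, ERNIE, ViT, DeiT, Swin Transformer, etc.) and other deep learning material, see: awesome-DeepLearning

- For PaddlePaddle framework material, see: PaddlePaddle Deep Learning Platform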

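1. Competition Overview

The competition page is: http://ailab.aiwin.org.cn/competitions/64

This project modifies and optimizes the official competition baseline, which is available at: https://aistudio.baidu.com/aistudio/projectdetail/2653802

The original baseline scores 0.64; this version scores 0.6765, an improvement of 3.65 percentage points. Further optimization will follow.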

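1.1 Background

The electrocardiogram (ECG) is one of the most basic clinical examinations, and because it is safe and convenient it is a key tool for diagnosing heart disease. There is a large daily demand for ECG interpretation, but there are not enough ECG specialists nationwide, so many hospitals face a shortage of qualified ECG physicians.

Artificial intelligence opens new possibilities for easing this shortage. Because both ECG data and ECG diagnoses are highly standardized, ECG is comparatively well suited to developing AI-based diagnostic algorithms.

ECGs can reveal a very wide range of conditions, yet most algorithms on the market target only a few specific categories. There is not yet an algorithm that, like a physician, performs multi-label, multi-class diagnosis according to clinical diagnostic standards at an acceptable accuracy. This competition aims to attract talented algorithm developers to contribute to AI-based ECG diagnosis.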

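1.2 Task

Participants develop and compete with multi-label, multi-class algorithms for standard clinical 12-lead ECG data.

Using the training data provided by the organizers, participants must design and implement models and algorithms that automatically diagnose standard 12-lead resting ECGs. The ECGs fall into 12 categories: normal ECG, sinus bradycardia, sinus tachycardia, sinus arrhythmia, atrial fibrillation, premature ventricular contraction, premature atrial contraction, first-degree atrioventricular block, complete right bundle branch block, T-wave change, ST change, and other.

The competition has two related tasks: Task 1 requires a binary label (normal vs. abnormal) for each ECG; Task 2 requires diagnostic labels from the 12 categories above for each ECG.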

1.3 Data

The ECG signals are in mV, sampled at 500 Hz, 10 seconds long, and stored in MAT format. Each file holds the voltage signals of the 12 leads (I, II, III, aVR, aVL, aVF, V1, V2, V3, V4, V5, and V6).
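The numbers above fix the array layout: 500 Hz × 10 s gives 5000 samples per lead, and the lead order determines which row holds which lead. A small sketch of that bookkeeping (the helper names are ours, for illustration only):

```python
FS_HZ = 500       # sampling rate (Hz), from the data description
DURATION_S = 10   # record length (s)
LEADS = ["I", "II", "III", "aVR", "aVL", "aVF",
         "V1", "V2", "V3", "V4", "V5", "V6"]

N_SAMPLES = FS_HZ * DURATION_S  # samples per lead


def lead_row(name):
    """Row of a lead in the (12, N_SAMPLES) matrix, following the order above."""
    return LEADS.index(name)


def sample_to_seconds(i):
    """Time stamp (s) of sample i."""
    return i / FS_HZ


print((len(LEADS), N_SAMPLES))   # (12, 5000)
print(lead_row("aVR"))           # 3
print(sample_to_seconds(2500))   # 5.0
```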

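1.3.1 Data for Task 1

The data is split into a training set with visible labels and a test set with hidden labels. Both can be downloaded: the 2021A_T2_Task1_数据集 package on the competition's "Submission" > "Download" page (「参赛提交」 > 「下载」) contains the training and test sets.

The training data consists of 1600 ECG records in MAT format with their diagnostic labels ("normal" or "abnormal", in CSV format); the test data consists of 400 ECG records in MAT format.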

- Data directory (DATA):
  - trainreference.csv: labels for the data in TRAIN
  - TRAIN: training data
  - VAL: test data

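- Data format
  - 12-lead data stored in MATLAB-format files, with shape (12, 5000).
  - Sampled at 500 Hz, with 10 s of valid data. See the code below for how to read it.
  - Rows 0 to 11 hold leads I, II, III, aVR, aVL, aVF, V1, V2, V3, V4, V5, and V6, in mV.

```python
import scipy.io as sio

# Each file stores the 12 x 5000 signal matrix under the key 'ecgdata'
ecgdata = sio.loadmat("TEST0001.MAT")['ecgdata']
```

- Format of trainreference.csv: one file per line, as `file name,LABEL` (0 = normal ECG, 1 = abnormal ECG).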
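The file layout can be checked without the competition files. The sketch below is a stand-in built on assumptions: it writes a random (12, 5000) float32 matrix under the key 'ecgdata' with `scipy.io.savemat`, reads it back with `loadmat` as in the snippet above, and parses a two-row label file. The `name,tag` header is assumed from the column names the notebook's code uses later; the signal values are random, not real ECG data.

```python
import io
import os
import tempfile

import numpy as np
import pandas as pd
import scipy.io as sio

rng = np.random.default_rng(0)
fake_ecg = (0.1 * rng.standard_normal((12, 5000))).astype(np.float32)  # random stand-in

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "TEST0001.mat")
    sio.savemat(path, {"ecgdata": fake_ecg})   # same key as the competition files
    ecgdata = sio.loadmat(path)["ecgdata"]     # read back the same way as above

# Label file with an assumed "name,tag" header (0 = normal, 1 = abnormal)
csv_text = "name,tag\nTEST0001,1\nTEST0002,0\n"
labels = pd.read_csv(io.StringIO(csv_text))

print(ecgdata.shape, ecgdata.dtype)   # (12, 5000) float32
print(labels["tag"].tolist())         # [1, 0]
```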

1.4 Evaluation

2. Data Preprocessing

```python
!unzip -o 2021A_T2_Task1_数据集含训练集和测试集.zip > out.log

import glob, os

import numpy as np
import pandas as pd
import paddle
import paddle.nn as nn
from paddle.io import DataLoader, Dataset
import paddle.optimizer as optim
import scipy.io as sio
from sklearn.model_selection import StratifiedKFold

# Load every record as an array of shape (1, 12, 5000).
# The code assumes the sorted file order matches the row order of trainreference.csv.
train_mat = glob.glob('./train/*.mat')
train_mat.sort()
train_mat = [sio.loadmat(x)['ecgdata'].reshape(1, 12, 5000) for x in train_mat]

test_mat = glob.glob('./val/*.mat')
test_mat.sort()
test_mat = [sio.loadmat(x)['ecgdata'].reshape(1, 12, 5000) for x in test_mat]

# Labels: 0 = normal, 1 = abnormal; float32 because BCEWithLogitsLoss expects float targets
train_df = pd.read_csv('trainreference.csv')
train_df['tag'] = train_df['tag'].astype(np.float32)
```

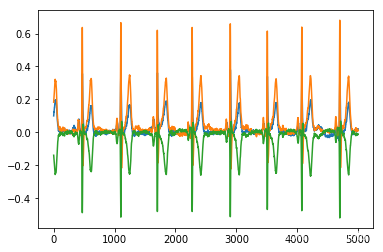

```python
%matplotlib inline
import matplotlib.pyplot as plt

# Plot three leads of the first training record: rows 0, 1 and 3 are leads I, II and aVR
plt.plot(range(5000), train_mat[0][0][0])
plt.plot(range(5000), train_mat[0][0][1])
plt.plot(range(5000), train_mat[0][0][3])
```

```python
train_df.head()
```

|   | name | tag |
|---|---|---|
| 0 | TEST0001 | 1.0 |
| 1 | TEST0002 | 0.0 |
| 2 | TEST0003 | 1.0 |
| 3 | TEST0004 | 0.0 |
| 4 | TEST0005 | 1.0 |

```python
print(test_mat[0].shape)
```

```
(1, 12, 5000)
```

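```python
class MyDataset(Dataset):
    """Yields a random time window of length mat_dim from each 12-lead record."""

    def __init__(self, mat, label, mat_dim=3000):
        super(MyDataset, self).__init__()
        self.mat = mat          # records, each of shape (1, 12, 5000)
        self.label = label      # one 0/1 label per record
        self.mat_dim = mat_dim  # window length in samples

    def __len__(self):
        return len(self.mat)

    def __getitem__(self, index):
        # Random crop along the time axis (a simple form of data augmentation)
        idx = np.random.randint(0, 5000 - self.mat_dim)
        # idy = np.random.choice(range(12), 9)  # unused: randomly pick 9 lead indices
        inputs = paddle.to_tensor(self.mat[index][:, :, idx:idx + self.mat_dim], dtype='float32')
        # Wrap the scalar label in a list so each label has shape [1] and a batch has shape [N, 1]
        label = paddle.to_tensor([self.label[index]], dtype='float32')
        return inputs, label
```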
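The random crop in `__getitem__` is what makes every epoch (and, later, every test-time pass) see a slightly different window. A NumPy-only sketch of the same idea, using a `numpy.random.Generator` instead of the global `np.random` the notebook uses (the helper name `random_crop` is ours):

```python
import numpy as np


def random_crop(record, mat_dim, rng):
    """Cut a random window of mat_dim samples from a (1, 12, T) record.

    The start offset is drawn from [0, T - mat_dim), matching
    np.random.randint(0, 5000 - self.mat_dim) in MyDataset.
    """
    total = record.shape[-1]
    start = int(rng.integers(0, total - mat_dim))
    return record[..., start:start + mat_dim], start


rng = np.random.default_rng(42)
# Toy record whose value equals the sample index, so the crop start is easy to see
record = np.tile(np.arange(5000, dtype=np.float32), (1, 12, 1))
window, start = random_crop(record, 4000, rng)
print(window.shape)              # (1, 12, 4000)
print(window[0, 0, 0] == start)  # True
```

Because the upper bound is exclusive in both `np.random.randint` and `Generator.integers`, the very last start offset (which would end exactly at sample 5000) is never drawn; this is harmless in practice.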

Inspect the generated data:

```python
BATCH_SIZE = 30

train_ds = MyDataset(train_mat, train_df['tag'])
train_loader = DataLoader(train_ds, batch_size=BATCH_SIZE, shuffle=True)

# Print a single batch to check shapes and dtypes
for batch in train_loader:
    print(batch)
    break
```

The batch is a list of two tensors: inputs of shape [30, 1, 12, 3000] (batch, channel, leads, window length) and labels of shape [30, 1]. Abridged output:

```
[Tensor(shape=[30, 1, 12, 3000], dtype=float32, place=CUDAPlace(0), stop_gradient=True,
       [[[[ 0.07076000,  0.06832000,  0.06100000, ..., -0.09028000, -0.02196000,  0.05368000],
          [ 0.12687999,  0.12444000,  0.11468000, ...,  0.11224000,  0.18300000,  0.25863999],
          ...,
          [ 0.42455998,  0.41723999,  0.40748000, ...,  0.09760000,  0.21716000,  0.36600000]]],
        ...,
        [[[-0.14396000, -0.14640000, -0.14640000, ..., -0.22691999, -0.24156000, -0.24888000],
          ...,
          [-0.33671999, -0.33916000, -0.34160000, ..., -0.16835999, -0.19032000, -0.20984000]]]]),
 Tensor(shape=[30, 1], dtype=float32, place=CUDAPlace(0), stop_gradient=True,
       [[1.],
        [1.],
        [1.],
        ...,
        [0.],
        [1.]])]
```

3. Building the Model

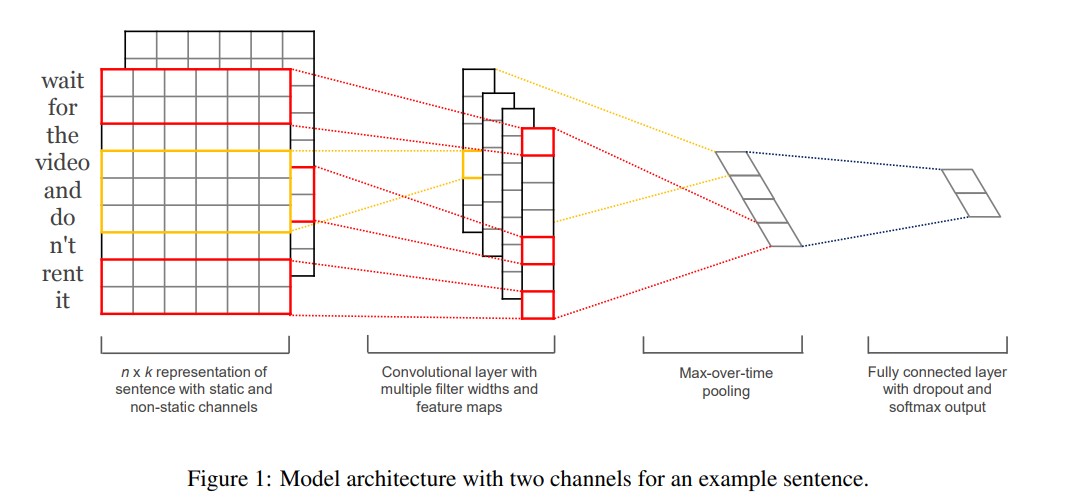

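The TextCNN model was proposed by Yoon Kim (later a member of the Harvard NLP group) in the 2014 paper "Convolutional Neural Networks for Sentence Classification". In computer vision, CNNs are widely and successfully used to extract local feature maps from images, so the author brought them to NLP for text classification, using convolutions to capture relationships between neighboring words.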
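In this notebook the "sentence" is an ECG window of shape (12, mat_dim): the leads play the role of words and the time axis plays the role of the embedding dimension, because each Conv2D kernel has shape (kernel_size, mat_dim) and so covers kernel_size adjacent leads over the whole window. The NumPy sketch below mimics one filter per kernel size (fixed all-ones weights, no bias) just to show the resulting shapes; it illustrates the idea and is not Paddle's implementation.

```python
import numpy as np


def full_width_conv(x, kernel, bias=0.0):
    """Valid cross-correlation of x (H, W) with a kernel (k, W) spanning the full width.

    Returns H - k + 1 scores, one per group of k adjacent rows (leads).
    """
    H, W = x.shape
    k, kw = kernel.shape
    assert kw == W, "kernel must span the whole time window"
    return np.array([np.sum(x[i:i + k] * kernel) + bias for i in range(H - k + 1)])


def textcnn_features(x, kernels):
    """For each kernel: ReLU, then max over lead positions; results are concatenated."""
    feats = []
    for kern in kernels:
        scores = np.maximum(full_width_conv(x, kern), 0.0)  # ReLU
        feats.append(scores.max())                           # max-pool over positions
    return np.array(feats)


x = np.ones((12, 8))                          # 12 leads, toy window of 8 samples
kernels = [np.ones((k, 8)) for k in (3, 4, 5)]
print(full_width_conv(x, kernels[0]).shape)   # (10,)  -> 12 - 3 + 1 positions
print(textcnn_features(x, kernels))           # [24. 32. 40.]
```

With 30 filters per kernel size instead of one, the concatenated feature vector has 3 × 30 = 90 entries, which is the input size of the final linear layer.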

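```python
class TextCNN(nn.Layer):
    """TextCNN over 12-lead ECG windows with a fixed length of 3000 samples."""

    def __init__(self, kernel_num=30, kernel_size=[3, 4, 5], dropout=0.5):
        super(TextCNN, self).__init__()
        self.kernel_num = kernel_num
        self.kernel_size = kernel_size
        self.dropout = dropout
        # Each kernel covers kernel_size_ adjacent leads and the whole 3000-sample window
        self.convs = nn.LayerList([nn.Conv2D(1, self.kernel_num, (kernel_size_, 3000))
                                   for kernel_size_ in self.kernel_size])
        # Note: this dropout layer is created but not applied in forward()
        self.dropout = nn.Dropout(self.dropout)
        self.linear = nn.Linear(len(self.kernel_size) * self.kernel_num, 1)

    def forward(self, x):
        # x: [N, 1, leads, 3000] -> [N, kernel_num, leads - k + 1] per kernel size
        convs = [nn.ReLU()(conv(x)).squeeze(3) for conv in self.convs]
        # Max over the lead axis -> [N, kernel_num] per kernel size
        pool_out = [nn.MaxPool1D(block.shape[2])(block).squeeze(2) for block in convs]
        pool_out = paddle.concat(pool_out, 1)  # [N, len(kernel_size) * kernel_num]
        logits = self.linear(pool_out)         # [N, 1] raw logits
        return logits
```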

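```python
class TextCNN_Plus(nn.Layer):
    """Same as TextCNN, but the kernel width (window length) is the mat_dim parameter."""

    def __init__(self, kernel_num=30, kernel_size=[3, 4, 5], dropout=0.5, mat_dim=3000):
        super(TextCNN_Plus, self).__init__()
        self.kernel_num = kernel_num
        self.kernel_size = kernel_size
        self.dropout = dropout
        # Each kernel covers kernel_size_ adjacent leads and the whole mat_dim-sample window
        self.convs = nn.LayerList([nn.Conv2D(1, self.kernel_num, (kernel_size_, mat_dim))
                                   for kernel_size_ in self.kernel_size])
        # Note: this dropout layer is created but not applied in forward()
        self.dropout = nn.Dropout(self.dropout)
        self.linear = nn.Linear(len(self.kernel_size) * self.kernel_num, 1)

    def forward(self, x):
        # x: [N, 1, leads, mat_dim] -> [N, kernel_num, leads - k + 1] per kernel size
        convs = [nn.ReLU()(conv(x)).squeeze(3) for conv in self.convs]
        # Max over the lead axis -> [N, kernel_num] per kernel size
        pool_out = [nn.MaxPool1D(block.shape[2])(block).squeeze(2) for block in convs]
        pool_out = paddle.concat(pool_out, 1)  # [N, len(kernel_size) * kernel_num]
        logits = self.linear(pool_out)         # [N, 1] raw logits
        return logits
```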

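```python
# model = TextCNN()

# Window length in samples (out of 5000); try other values and compare the results
mat_dim = 4000
model = TextCNN_Plus(mat_dim=mat_dim)

EPOCHS = 200
LEARNING_RATE = 0.0005

# The summary uses 9 rows (matching the commented-out 9-lead sampling in MyDataset);
# training feeds all 12 leads. The parameter count does not depend on this dimension.
paddle.summary(model, (64, 1, 9, mat_dim))
```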

```
---------------------------------------------------------------------------
 Layer (type)       Input Shape          Output Shape         Param #
===========================================================================
   Conv2D-4      [[64, 1, 9, 4000]]     [64, 30, 7, 1]        360,030
   Conv2D-5      [[64, 1, 9, 4000]]     [64, 30, 6, 1]        480,030
   Conv2D-6      [[64, 1, 9, 4000]]     [64, 30, 5, 1]        600,030
   Linear-2          [[64, 90]]            [64, 1]               91
===========================================================================
Total params: 1,440,181
Trainable params: 1,440,181
Non-trainable params: 0
---------------------------------------------------------------------------
Input size (MB): 8.79
Forward/backward pass size (MB): 0.26
Params size (MB): 5.49
Estimated Total Size (MB): 14.55
---------------------------------------------------------------------------

{'total_params': 1440181, 'trainable_params': 1440181}
```
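The summary numbers can be reproduced by hand: a Conv2D layer has out_channels × in_channels × kernel_h × kernel_w weights plus one bias per output channel, and a "valid" stride-1 convolution over H rows with a k-row kernel leaves H − k + 1 positions. A quick arithmetic check (helper names are ours):

```python
def conv2d_params(in_ch, out_ch, kh, kw):
    """Weights plus one bias per output channel."""
    return out_ch * in_ch * kh * kw + out_ch


def linear_params(in_features, out_features):
    return in_features * out_features + out_features


def conv_out_height(height, k):
    """Output height of a 'valid' convolution with stride 1."""
    return height - k + 1


mat_dim, kernel_num, kernel_sizes = 4000, 30, (3, 4, 5)
convs = [conv2d_params(1, kernel_num, k, mat_dim) for k in kernel_sizes]
head = linear_params(len(kernel_sizes) * kernel_num, 1)

print(convs, head, sum(convs) + head)                  # [360030, 480030, 600030] 91 1440181
print([conv_out_height(9, k) for k in kernel_sizes])   # [7, 6, 5]  (summary input)
print([conv_out_height(12, k) for k in kernel_sizes])  # [10, 9, 8] (all 12 leads)
```

Almost all parameters sit in the convolutions because each kernel spans the full 4000-sample window; shortening `mat_dim` shrinks the model proportionally.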

4. Model Training
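The training loop below optimizes `nn.BCEWithLogitsLoss` on raw logits and turns them into 0/1 predictions with sigmoid(logit) > 0.5. A NumPy sketch of both steps (our own helper names, assuming the default mean reduction): the numerically stable form max(z, 0) − z·y + log(1 + exp(−|z|)) equals the textbook binary cross-entropy of sigmoid(z), and sigmoid(z) > 0.5 is the same test as z > 0.

```python
import numpy as np


def bce_with_logits(logits, targets):
    """Mean binary cross-entropy computed directly from logits (stable form)."""
    z = np.asarray(logits, dtype=np.float64)
    y = np.asarray(targets, dtype=np.float64)
    return np.mean(np.maximum(z, 0) - z * y + np.log1p(np.exp(-np.abs(z))))


def naive_bce(logits, targets):
    """Textbook formula via an explicit sigmoid; can overflow or underflow for large |z|."""
    p = 1.0 / (1.0 + np.exp(-np.asarray(logits, dtype=np.float64)))
    y = np.asarray(targets, dtype=np.float64)
    return np.mean(-(y * np.log(p) + (1 - y) * np.log(1 - p)))


def predict_label(logits, threshold=0.5):
    """Hard 0/1 labels from logits via the sigmoid probability."""
    p = 1.0 / (1.0 + np.exp(-np.asarray(logits, dtype=np.float64)))
    return (p > threshold).astype(np.int64)


z, y = [-2.0, 0.0, 3.0], [0.0, 1.0, 1.0]
print(np.isclose(bce_with_logits(z, y), naive_bce(z, y)))  # True
print(predict_label([-1.0, 0.0, 2.0]))                     # [0 0 1]
```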

```python
num_splits = 5
skf = StratifiedKFold(n_splits=num_splits)

output_dir = 'checkpoint'
if not os.path.exists(output_dir):
    os.mkdir(output_dir)

train_arr = np.array(train_mat)  # shape (num_records, 1, 12, 5000)
fold_idx = 0
for tr_idx, val_idx in skf.split(train_mat, train_df['tag'].values):
    train_ds = MyDataset(train_arr[tr_idx], train_df['tag'].values[tr_idx], mat_dim=mat_dim)
    dev_ds = MyDataset(train_arr[val_idx], train_df['tag'].values[val_idx], mat_dim=mat_dim)
    Train_Loader = DataLoader(train_ds, batch_size=BATCH_SIZE, shuffle=True)
    Val_Loader = DataLoader(dev_ds, batch_size=BATCH_SIZE, shuffle=True)

    # A fresh model and optimizer for every fold
    # model = TextCNN()
    model = TextCNN_Plus(mat_dim=mat_dim)
    optimizer = optim.Adam(parameters=model.parameters(), learning_rate=LEARNING_RATE)
    criterion = nn.BCEWithLogitsLoss()

    Test_best_Acc = 0
    for epoch in range(0, EPOCHS):
        Train_Loss, Test_Loss = [], []
        Train_Acc, Test_Acc = [], []

        model.train()
        for i, (x, y) in enumerate(Train_Loader):
            pred = model(x)
            loss = criterion(pred, y)
            Train_Loss.append(loss.item())
            # Threshold the sigmoid probability at 0.5 to get 0/1 predictions
            pred = (paddle.nn.functional.sigmoid(pred) > 0.5).astype('int64')
            Train_Acc.append((pred.numpy() == y.numpy()).mean())
            loss.backward()
            optimizer.step()
            optimizer.clear_grad()

        # Validation on the held-out fold (no gradients needed)
        model.eval()
        with paddle.no_grad():
            for i, (x, y) in enumerate(Val_Loader):
                pred = model(x)
                Test_Loss.append(criterion(pred, y).item())
                pred = (paddle.nn.functional.sigmoid(pred) > 0.5).astype('int64')
                Test_Acc.append((pred.numpy() == y.numpy()).mean())

        if epoch % 10 == 0:
            print(
                "Epoch: [{}/{}] TrainLoss/TestLoss: {:.4f}/{:.4f} TrainAcc/TestAcc: {:.4f}/{:.4f}".format(
                    epoch + 1, EPOCHS,
                    np.mean(Train_Loss), np.mean(Test_Loss),
                    np.mean(Train_Acc), np.mean(Test_Acc)
                )
            )

        # Keep the checkpoint with the best validation accuracy for this fold
        if Test_best_Acc < np.mean(Test_Acc):
            print(f'Fold {fold_idx} Acc imporve from {Test_best_Acc} to {np.mean(Test_Acc)} Save Model...')
            paddle.save(model.state_dict(), os.path.join(output_dir, "model_{}.pdparams".format(fold_idx)))
            Test_best_Acc = np.mean(Test_Acc)

    fold_idx += 1
```

Epoch: [1/200] TrainLoss/TestLoss: 1.7330/2.0799 TrainAcc/TestAcc: 0.4888/0.5515

Fold 0 Acc imporve from 0 to 0.5515151515151515 Save Model...

Fold 0 Acc imporve from 0.5515151515151515 to 0.5545454545454546 Save Model...

Fold 0 Acc imporve from 0.5545454545454546 to 0.5863636363636362 Save Model...

Epoch: [11/200] TrainLoss/TestLoss: 1.1149/1.1665 TrainAcc/TestAcc: 0.6012/0.5773

Fold 0 Acc imporve from 0.5863636363636362 to 0.6000000000000001 Save Model...

Fold 0 Acc imporve from 0.6000000000000001 to 0.6242424242424243 Save Model...

Fold 0 Acc imporve from 0.6242424242424243 to 0.6272727272727271 Save Model...

Epoch: [21/200] TrainLoss/TestLoss: 1.1924/1.4870 TrainAcc/TestAcc: 0.6306/0.6318

Fold 0 Acc imporve from 0.6272727272727271 to 0.6318181818181818 Save Model...

Fold 0 Acc imporve from 0.6318181818181818 to 0.6515151515151515 Save Model...

Fold 0 Acc imporve from 0.6515151515151515 to 0.6636363636363636 Save Model...

Epoch: [31/200] TrainLoss/TestLoss: 0.7791/1.0332 TrainAcc/TestAcc: 0.6810/0.6424

Fold 0 Acc imporve from 0.6636363636363636 to 0.7045454545454546 Save Model...

Epoch: [41/200] TrainLoss/TestLoss: 0.9020/3.3706 TrainAcc/TestAcc: 0.7252/0.5348

Fold 0 Acc imporve from 0.7045454545454546 to 0.7181818181818183 Save Model...

Epoch: [51/200] TrainLoss/TestLoss: 0.5962/1.0396 TrainAcc/TestAcc: 0.7539/0.7136

Fold 0 Acc imporve from 0.7181818181818183 to 0.7303030303030303 Save Model...

Fold 0 Acc imporve from 0.7303030303030303 to 0.7454545454545454 Save Model...

Epoch: [61/200] TrainLoss/TestLoss: 0.8766/0.7803 TrainAcc/TestAcc: 0.7112/0.7470

Fold 0 Acc imporve from 0.7454545454545454 to 0.746969696969697 Save Model...

Epoch: [71/200] TrainLoss/TestLoss: 0.6410/0.9276 TrainAcc/TestAcc: 0.7636/0.7152

Fold 0 Acc imporve from 0.746969696969697 to 0.7621212121212121 Save Model...

Epoch: [81/200] TrainLoss/TestLoss: 0.8297/1.0329 TrainAcc/TestAcc: 0.7260/0.7106

Epoch: [91/200] TrainLoss/TestLoss: 0.7787/1.3240 TrainAcc/TestAcc: 0.7353/0.6970

Epoch: [101/200] TrainLoss/TestLoss: 0.5585/0.9102 TrainAcc/TestAcc: 0.7853/0.6773

Fold 0 Acc imporve from 0.7621212121212121 to 0.7696969696969697 Save Model...

Epoch: [111/200] TrainLoss/TestLoss: 0.4791/0.8420 TrainAcc/TestAcc: 0.7946/0.7364

Epoch: [121/200] TrainLoss/TestLoss: 0.4775/0.7500 TrainAcc/TestAcc: 0.8027/0.7288

Fold 0 Acc imporve from 0.7696969696969697 to 0.7712121212121211 Save Model...

Epoch: [131/200] TrainLoss/TestLoss: 0.4473/0.7472 TrainAcc/TestAcc: 0.7965/0.7394

Epoch: [141/200] TrainLoss/TestLoss: 0.5836/1.0302 TrainAcc/TestAcc: 0.7783/0.7530

Epoch: [151/200] TrainLoss/TestLoss: 0.4230/1.2617 TrainAcc/TestAcc: 0.8066/0.6742

Epoch: [161/200] TrainLoss/TestLoss: 0.5813/1.0279 TrainAcc/TestAcc: 0.7791/0.7121

Epoch: [171/200] TrainLoss/TestLoss: 0.3763/0.7596 TrainAcc/TestAcc: 0.8260/0.7455

Fold 0 Acc imporve from 0.7712121212121211 to 0.7818181818181817 Save Model...

Epoch: [181/200] TrainLoss/TestLoss: 0.4017/0.7252 TrainAcc/TestAcc: 0.8357/0.7470

Fold 0 Acc imporve from 0.7818181818181817 to 0.7863636363636364 Save Model...

Epoch: [191/200] TrainLoss/TestLoss: 0.4182/0.8956 TrainAcc/TestAcc: 0.8213/0.7273

Epoch: [1/200] TrainLoss/TestLoss: 1.6985/3.0214 TrainAcc/TestAcc: 0.5097/0.4500

Fold 1 Acc imporve from 0 to 0.45 Save Model...

Fold 1 Acc imporve from 0.45 to 0.5045454545454545 Save Model...

Fold 1 Acc imporve from 0.5045454545454545 to 0.5136363636363637 Save Model...

Fold 1 Acc imporve from 0.5136363636363637 to 0.5363636363636364 Save Model...

Fold 1 Acc imporve from 0.5363636363636364 to 0.5409090909090909 Save Model...

Fold 1 Acc imporve from 0.5409090909090909 to 0.6212121212121212 Save Model...

Epoch: [11/200] TrainLoss/TestLoss: 0.8972/1.1737 TrainAcc/TestAcc: 0.6140/0.5803

Epoch: [21/200] TrainLoss/TestLoss: 0.7527/1.9002 TrainAcc/TestAcc: 0.7101/0.6227

Fold 1 Acc imporve from 0.6212121212121212 to 0.6227272727272727 Save Model...

Fold 1 Acc imporve from 0.6227272727272727 to 0.6363636363636364 Save Model...

Fold 1 Acc imporve from 0.6363636363636364 to 0.6681818181818182 Save Model...

Fold 1 Acc imporve from 0.6681818181818182 to 0.6712121212121213 Save Model...

Epoch: [31/200] TrainLoss/TestLoss: 0.7079/1.1375 TrainAcc/TestAcc: 0.7333/0.6530

Fold 1 Acc imporve from 0.6712121212121213 to 0.6939393939393941 Save Model...

Epoch: [41/200] TrainLoss/TestLoss: 0.6693/1.4935 TrainAcc/TestAcc: 0.7430/0.6439

Epoch: [51/200] TrainLoss/TestLoss: 0.7388/1.4773 TrainAcc/TestAcc: 0.7306/0.6545

Epoch: [61/200] TrainLoss/TestLoss: 0.5940/1.1551 TrainAcc/TestAcc: 0.7636/0.6561

Fold 1 Acc imporve from 0.6939393939393941 to 0.703030303030303 Save Model...

Fold 1 Acc imporve from 0.703030303030303 to 0.712121212121212 Save Model...

Epoch: [71/200] TrainLoss/TestLoss: 0.5330/0.9578 TrainAcc/TestAcc: 0.7628/0.6909

Fold 1 Acc imporve from 0.712121212121212 to 0.7151515151515151 Save Model...

Fold 1 Acc imporve from 0.7151515151515151 to 0.7181818181818183 Save Model...

Epoch: [81/200] TrainLoss/TestLoss: 0.6177/1.4838 TrainAcc/TestAcc: 0.7682/0.6515

Epoch: [91/200] TrainLoss/TestLoss: 0.4597/1.5005 TrainAcc/TestAcc: 0.8062/0.7061

Fold 1 Acc imporve from 0.7181818181818183 to 0.7287878787878787 Save Model...

Fold 1 Acc imporve from 0.7287878787878787 to 0.7333333333333333 Save Model...

Epoch: [101/200] TrainLoss/TestLoss: 0.4850/1.4562 TrainAcc/TestAcc: 0.7895/0.6864

Fold 1 Acc imporve from 0.7333333333333333 to 0.740909090909091 Save Model...

Fold 1 Acc imporve from 0.740909090909091 to 0.7424242424242423 Save Model...

Epoch: [111/200] TrainLoss/TestLoss: 0.5709/1.5192 TrainAcc/TestAcc: 0.7736/0.7121

Epoch: [121/200] TrainLoss/TestLoss: 0.5519/1.0556 TrainAcc/TestAcc: 0.7946/0.7182

Epoch: [131/200] TrainLoss/TestLoss: 0.4370/1.2170 TrainAcc/TestAcc: 0.8128/0.7273

Fold 1 Acc imporve from 0.7424242424242423 to 0.743939393939394 Save Model...

Epoch: [141/200] TrainLoss/TestLoss: 0.3916/1.0146 TrainAcc/TestAcc: 0.8422/0.7591

Fold 1 Acc imporve from 0.743939393939394 to 0.759090909090909 Save Model...

Epoch: [151/200] TrainLoss/TestLoss: 0.4538/1.2573 TrainAcc/TestAcc: 0.8140/0.7258

Epoch: [161/200] TrainLoss/TestLoss: 0.3592/1.3689 TrainAcc/TestAcc: 0.8426/0.7364

Fold 1 Acc imporve from 0.759090909090909 to 0.7666666666666667 Save Model...

Epoch: [171/200] TrainLoss/TestLoss: 0.3776/1.1285 TrainAcc/TestAcc: 0.8333/0.7030

Epoch: [181/200] TrainLoss/TestLoss: 0.4470/1.2151 TrainAcc/TestAcc: 0.8465/0.7197

Epoch: [191/200] TrainLoss/TestLoss: 0.3465/1.0512 TrainAcc/TestAcc: 0.8477/0.7394

Epoch: [1/200] TrainLoss/TestLoss: 1.4319/1.3929 TrainAcc/TestAcc: 0.5027/0.5424

Fold 2 Acc imporve from 0 to 0.5424242424242424 Save Model...

Fold 2 Acc imporve from 0.5424242424242424 to 0.5499999999999999 Save Model...

Fold 2 Acc imporve from 0.5499999999999999 to 0.562121212121212 Save Model...

Fold 2 Acc imporve from 0.562121212121212 to 0.5863636363636364 Save Model...

Fold 2 Acc imporve from 0.5863636363636364 to 0.606060606060606 Save Model...

Fold 2 Acc imporve from 0.606060606060606 to 0.6075757575757575 Save Model...

Epoch: [11/200] TrainLoss/TestLoss: 1.0302/0.8324 TrainAcc/TestAcc: 0.6016/0.5788

Fold 2 Acc imporve from 0.6075757575757575 to 0.6242424242424243 Save Model...

Fold 2 Acc imporve from 0.6242424242424243 to 0.6393939393939394 Save Model...

Epoch: [21/200] TrainLoss/TestLoss: 0.8184/0.9228 TrainAcc/TestAcc: 0.6682/0.6000

Fold 2 Acc imporve from 0.6393939393939394 to 0.6590909090909091 Save Model...

Fold 2 Acc imporve from 0.6590909090909091 to 0.6606060606060606 Save Model...

Fold 2 Acc imporve from 0.6606060606060606 to 0.6818181818181818 Save Model...

Epoch: [31/200] TrainLoss/TestLoss: 0.7347/0.8336 TrainAcc/TestAcc: 0.6810/0.6652

Epoch: [41/200] TrainLoss/TestLoss: 0.6615/0.8518 TrainAcc/TestAcc: 0.7306/0.6682

Fold 2 Acc imporve from 0.6818181818181818 to 0.7181818181818183 Save Model...

Epoch: [51/200] TrainLoss/TestLoss: 0.7626/0.9011 TrainAcc/TestAcc: 0.7101/0.6455

Epoch: [61/200] TrainLoss/TestLoss: 0.6877/0.9661 TrainAcc/TestAcc: 0.7434/0.6864

Fold 2 Acc imporve from 0.7181818181818183 to 0.7196969696969696 Save Model...

Epoch: [71/200] TrainLoss/TestLoss: 0.9444/1.0745 TrainAcc/TestAcc: 0.7411/0.6636

Fold 2 Acc imporve from 0.7196969696969696 to 0.7227272727272728 Save Model...

Epoch: [81/200] TrainLoss/TestLoss: 0.4584/0.7630 TrainAcc/TestAcc: 0.7942/0.7121

Fold 2 Acc imporve from 0.7227272727272728 to 0.7363636363636363 Save Model...

Epoch: [91/200] TrainLoss/TestLoss: 0.6479/0.7880 TrainAcc/TestAcc: 0.7624/0.7045

Fold 2 Acc imporve from 0.7363636363636363 to 0.7378787878787879 Save Model...

Epoch: [101/200] TrainLoss/TestLoss: 0.4038/0.8624 TrainAcc/TestAcc: 0.8155/0.7000

Fold 2 Acc imporve from 0.7378787878787879 to 0.740909090909091 Save Model...

Epoch: [111/200] TrainLoss/TestLoss: 0.5167/1.1825 TrainAcc/TestAcc: 0.7783/0.6394

Epoch: [121/200] TrainLoss/TestLoss: 0.4551/0.6876 TrainAcc/TestAcc: 0.8178/0.7045

Epoch: [131/200] TrainLoss/TestLoss: 0.3773/0.7628 TrainAcc/TestAcc: 0.8318/0.7076

Fold 2 Acc imporve from 0.740909090909091 to 0.756060606060606 Save Model...

Epoch: [141/200] TrainLoss/TestLoss: 0.4449/0.7816 TrainAcc/TestAcc: 0.8066/0.7121

Epoch: [151/200] TrainLoss/TestLoss: 0.3920/0.9619 TrainAcc/TestAcc: 0.8333/0.6576

Epoch: [161/200] TrainLoss/TestLoss: 0.3593/0.8076 TrainAcc/TestAcc: 0.8531/0.7061

Epoch: [171/200] TrainLoss/TestLoss: 0.3580/0.7125 TrainAcc/TestAcc: 0.8399/0.7015

Epoch: [181/200] TrainLoss/TestLoss: 0.4187/0.8865 TrainAcc/TestAcc: 0.8136/0.6970

Epoch: [191/200] TrainLoss/TestLoss: 0.4296/0.9679 TrainAcc/TestAcc: 0.8333/0.6985

Epoch: [1/200] TrainLoss/TestLoss: 1.1905/1.3540 TrainAcc/TestAcc: 0.5132/0.5288

Fold 3 Acc imporve from 0 to 0.5287878787878788 Save Model...

Fold 3 Acc imporve from 0.5287878787878788 to 0.6 Save Model...

Fold 3 Acc imporve from 0.6 to 0.6166666666666667 Save Model...

Fold 3 Acc imporve from 0.6166666666666667 to 0.6196969696969696 Save Model...

Fold 3 Acc imporve from 0.6196969696969696 to 0.6575757575757576 Save Model...

Epoch: [11/200] TrainLoss/TestLoss: 1.0034/1.4254 TrainAcc/TestAcc: 0.6140/0.5742

Fold 3 Acc imporve from 0.6575757575757576 to 0.6666666666666666 Save Model...

Epoch: [21/200] TrainLoss/TestLoss: 1.1638/1.3988 TrainAcc/TestAcc: 0.6442/0.6348

Fold 3 Acc imporve from 0.6666666666666666 to 0.6742424242424243 Save Model...

Fold 3 Acc imporve from 0.6742424242424243 to 0.6939393939393939 Save Model...

Fold 3 Acc imporve from 0.6939393939393939 to 0.7242424242424242 Save Model...

Epoch: [31/200] TrainLoss/TestLoss: 0.8169/1.3470 TrainAcc/TestAcc: 0.6907/0.6455

Fold 3 Acc imporve from 0.7242424242424242 to 0.7393939393939394 Save Model...

Epoch: [41/200] TrainLoss/TestLoss: 0.6667/1.1951 TrainAcc/TestAcc: 0.7434/0.6530

Fold 3 Acc imporve from 0.7393939393939394 to 0.7484848484848484 Save Model...

Epoch: [51/200] TrainLoss/TestLoss: 0.6451/0.9876 TrainAcc/TestAcc: 0.7527/0.6909

Fold 3 Acc imporve from 0.7484848484848484 to 0.7545454545454546 Save Model...

Epoch: [61/200] TrainLoss/TestLoss: 0.9693/1.7246 TrainAcc/TestAcc: 0.7306/0.6758

Fold 3 Acc imporve from 0.7545454545454546 to 0.7651515151515151 Save Model...

Fold 3 Acc imporve from 0.7651515151515151 to 0.7772727272727273 Save Model...

Epoch: [71/200] TrainLoss/TestLoss: 0.7805/0.7958 TrainAcc/TestAcc: 0.7531/0.7091

Epoch: [81/200] TrainLoss/TestLoss: 0.5368/0.8143 TrainAcc/TestAcc: 0.7771/0.6833

Fold 3 Acc imporve from 0.7772727272727273 to 0.7818181818181817 Save Model...

Epoch: [91/200] TrainLoss/TestLoss: 0.6720/1.3941 TrainAcc/TestAcc: 0.7775/0.7106

Epoch: [101/200] TrainLoss/TestLoss: 0.5038/0.7716 TrainAcc/TestAcc: 0.7880/0.7136

Epoch: [111/200] TrainLoss/TestLoss: 0.5754/0.9938 TrainAcc/TestAcc: 0.7829/0.6242

Epoch: [121/200] TrainLoss/TestLoss: 0.5233/1.3973 TrainAcc/TestAcc: 0.8019/0.6348

Epoch: [131/200] TrainLoss/TestLoss: 0.6141/0.7912 TrainAcc/TestAcc: 0.7919/0.7182

Epoch: [141/200] TrainLoss/TestLoss: 0.6052/1.0174 TrainAcc/TestAcc: 0.8019/0.7424

Epoch: [151/200] TrainLoss/TestLoss: 0.3852/0.6368 TrainAcc/TestAcc: 0.8364/0.7485

Epoch: [161/200] TrainLoss/TestLoss: 0.3939/0.6282 TrainAcc/TestAcc: 0.8399/0.7652

Fold 3 Acc imporve from 0.7818181818181817 to 0.7833333333333333 Save Model...

Epoch: [171/200] TrainLoss/TestLoss: 0.4331/0.9348 TrainAcc/TestAcc: 0.8171/0.7515

Epoch: [181/200] TrainLoss/TestLoss: 0.4611/0.5820 TrainAcc/TestAcc: 0.8333/0.7803

Epoch: [191/200] TrainLoss/TestLoss: 0.4302/0.8218 TrainAcc/TestAcc: 0.8384/0.7864

Fold 3 Acc imporve from 0.7833333333333333 to 0.7863636363636363 Save Model...

Fold 3 Acc imporve from 0.7863636363636363 to 0.8 Save Model...

Epoch: [1/200] TrainLoss/TestLoss: 1.1027/1.1086 TrainAcc/TestAcc: 0.5504/0.5288

Fold 4 Acc imporve from 0 to 0.5287878787878788 Save Model...

Fold 4 Acc imporve from 0.5287878787878788 to 0.5393939393939394 Save Model...

Fold 4 Acc imporve from 0.5393939393939394 to 0.5590909090909091 Save Model...

Fold 4 Acc imporve from 0.5590909090909091 to 0.5863636363636364 Save Model...

Fold 4 Acc imporve from 0.5863636363636364 to 0.5909090909090909 Save Model...

Epoch: [11/200] TrainLoss/TestLoss: 1.0410/0.9359 TrainAcc/TestAcc: 0.6186/0.5803

Fold 4 Acc imporve from 0.5909090909090909 to 0.6227272727272727 Save Model...

Fold 4 Acc imporve from 0.6227272727272727 to 0.6272727272727271 Save Model...

Fold 4 Acc imporve from 0.6272727272727271 to 0.643939393939394 Save Model...

Epoch: [21/200] TrainLoss/TestLoss: 0.8814/0.9290 TrainAcc/TestAcc: 0.6663/0.6652

Fold 4 Acc imporve from 0.643939393939394 to 0.665151515151515 Save Model...

Fold 4 Acc imporve from 0.665151515151515 to 0.6651515151515152 Save Model...

Fold 4 Acc imporve from 0.6651515151515152 to 0.6742424242424243 Save Model...

Fold 4 Acc imporve from 0.6742424242424243 to 0.6954545454545453 Save Model...

Epoch: [31/200] TrainLoss/TestLoss: 0.9937/1.2125 TrainAcc/TestAcc: 0.7027/0.6015

Fold 4 Acc imporve from 0.6954545454545453 to 0.6954545454545454 Save Model...

Fold 4 Acc imporve from 0.6954545454545454 to 0.7060606060606059 Save Model...

Epoch: [41/200] TrainLoss/TestLoss: 0.8065/1.1628 TrainAcc/TestAcc: 0.7256/0.7045

Fold 4 Acc imporve from 0.7060606060606059 to 0.706060606060606 Save Model...

Fold 4 Acc imporve from 0.706060606060606 to 0.7303030303030303 Save Model...

Epoch: [51/200] TrainLoss/TestLoss: 0.7314/0.8184 TrainAcc/TestAcc: 0.7229/0.6667

Epoch: [61/200] TrainLoss/TestLoss: 0.5739/0.7596 TrainAcc/TestAcc: 0.7643/0.6773

Fold 4 Acc imporve from 0.7303030303030303 to 0.7515151515151516 Save Model...

Epoch: [71/200] TrainLoss/TestLoss: 0.5126/0.6712 TrainAcc/TestAcc: 0.7911/0.7106

Fold 4 Acc imporve from 0.7515151515151516 to 0.7606060606060606 Save Model...

Epoch: [81/200] TrainLoss/TestLoss: 1.3434/0.8301 TrainAcc/TestAcc: 0.6922/0.7348

Epoch: [91/200] TrainLoss/TestLoss: 0.6726/0.7619 TrainAcc/TestAcc: 0.7589/0.6652

Epoch: [101/200] TrainLoss/TestLoss: 0.5097/0.8235 TrainAcc/TestAcc: 0.7795/0.7182

Epoch: [111/200] TrainLoss/TestLoss: 0.7147/0.7812 TrainAcc/TestAcc: 0.7671/0.7000

Epoch: [121/200] TrainLoss/TestLoss: 0.7623/0.7912 TrainAcc/TestAcc: 0.7682/0.6576

Epoch: [131/200] TrainLoss/TestLoss: 0.4290/0.9147 TrainAcc/TestAcc: 0.8151/0.6758

Epoch: [141/200] TrainLoss/TestLoss: 0.3888/0.7085 TrainAcc/TestAcc: 0.8310/0.7485

Epoch: [151/200] TrainLoss/TestLoss: 0.6185/0.8502 TrainAcc/TestAcc: 0.7957/0.6712

Epoch: [161/200] TrainLoss/TestLoss: 0.6964/0.8365 TrainAcc/TestAcc: 0.7725/0.7424

Epoch: [171/200] TrainLoss/TestLoss: 0.4363/0.8171 TrainAcc/TestAcc: 0.8140/0.7136

Epoch: [181/200] TrainLoss/TestLoss: 0.3293/0.6608 TrainAcc/TestAcc: 0.8616/0.7530

Epoch: [191/200] TrainLoss/TestLoss: 0.3757/0.7524 TrainAcc/TestAcc: 0.8341/0.7288
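The folds above come from `StratifiedKFold`, which keeps the normal/abnormal ratio of every validation fold close to that of the whole training set, so the per-fold accuracies are comparable. A toy demonstration on hypothetical, imbalanced labels (not the competition data):

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold

# Toy labels (hypothetical): 70 normal, 30 abnormal
y = np.array([0] * 70 + [1] * 30)
X = np.zeros((len(y), 1))  # features play no role in how the folds are formed

skf = StratifiedKFold(n_splits=5)
fold_stats = []
for tr_idx, val_idx in skf.split(X, y):
    # (train size, validation size, abnormal cases in validation)
    fold_stats.append((len(tr_idx), len(val_idx), int(y[val_idx].sum())))

print(fold_stats)  # [(80, 20, 6), (80, 20, 6), (80, 20, 6), (80, 20, 6), (80, 20, 6)]
```

Every validation fold keeps the 70/30 split (14 normal + 6 abnormal). Without `shuffle=True` the split depends only on the order of the labels, so rerunning the notebook reproduces the same folds.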

5. Prediction

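```python
# Average sigmoid probabilities over num_splits fold models x tta_count random-crop passes
test_perd = np.zeros(len(test_mat))
tta_count = 20

# Dummy labels: MyDataset needs one label per record, but they are not used here
test_ds = MyDataset(test_mat, [0] * len(test_mat), mat_dim=mat_dim)
Test_Loader = DataLoader(test_ds, batch_size=BATCH_SIZE, shuffle=False)

# Reuse the model object from training; its weights are replaced by each fold's checkpoint
model.eval()
with paddle.no_grad():
    for fold_idx in range(num_splits):
        layer_state_dict = paddle.load(os.path.join(output_dir, "model_{}.pdparams".format(fold_idx)))
        model.set_state_dict(layer_state_dict)
        for tta in range(tta_count):
            test_pred_list = []
            # Each pass draws a new random window per record (test-time augmentation)
            for i, (x, y) in enumerate(Test_Loader):
                pred = model(x)
                test_pred_list.append(paddle.nn.functional.sigmoid(pred).numpy())
            test_perd += np.vstack(test_pred_list)[:, 0]

test_perd /= tta_count * num_splits
```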
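The accumulation into `test_perd` is a plain average of probabilities over every (fold model, TTA pass) pair, thresholded at 0.5 only at the end. The sketch below reproduces that pattern with hypothetical stand-in "models" (simple functions returning noisy probabilities), so it runs without Paddle or checkpoints:

```python
import numpy as np


def ensemble_average(predict_fns, tta_count, rng):
    """Mean probability over every (model, TTA pass) pair.

    predict_fns: one callable per fold model, f(rng) -> probabilities of shape (n,);
    each call may use rng, e.g. to draw a different random crop.
    """
    total = None
    for fn in predict_fns:
        for _ in range(tta_count):
            p = fn(rng)
            total = p.copy() if total is None else total + p
    return total / (len(predict_fns) * tta_count)


# Hypothetical stand-ins for two fold models whose outputs vary slightly per pass
def fold_a(rng):
    return np.clip(np.array([0.2, 0.8]) + rng.normal(0, 0.01, 2), 0, 1)


def fold_b(rng):
    return np.clip(np.array([0.4, 0.6]) + rng.normal(0, 0.01, 2), 0, 1)


rng = np.random.default_rng(0)
probs = ensemble_average([fold_a, fold_b], tta_count=20, rng=rng)
labels = (probs > 0.5).astype(int)
print(labels)  # [0 1]
```

Averaging probabilities rather than hard votes lets a confident model outweigh an uncertain one; with 5 folds and 20 passes, each test record is scored 100 times.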

6. Generating the Submission

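```python
# Test record names (without ".mat"), in the same sorted order as test_mat
test_path = glob.glob('./val/*.mat')
test_path = [os.path.basename(x)[:-4] for x in test_path]
test_path.sort()

# Threshold the averaged probability at 0.5: 1 = abnormal, 0 = normal
test_answer = pd.DataFrame({
    'name': test_path,
    'tag': (test_perd > 0.5).astype(int)
})
test_answer.to_csv('answer.csv', index=None)

!rm -f answer.csv.zip
!zip answer.csv.zip answer.csv
```

```
  adding: answer.csv (deflated 80%)
```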

Test results:

| Model | Score | Notes |
|---|---|---|
| TextCNN (primary) | 0.6604 | Changed the k-fold strategy |
| TextCNN_Plus | 0.6765 | Changed the input window length (mat_dim) |

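7. Ideas for Improvement

- Use multi-fold cross-validation to train several models, and predict the test set multiple times.

- Add noise when loading the data, or use mixup data augmentation.

- Use a more powerful model; TextCNN is still too simple for this task.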
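The two augmentation ideas can be sketched in a few lines of NumPy. This is a hedged illustration, not something the notebook implements: the noise level `sigma=0.01` (in mV) and the mixup parameter `alpha=0.2` are arbitrary example values, and the records are random stand-ins for two cropped ECG windows.

```python
import numpy as np


def add_gaussian_noise(x, sigma, rng):
    """Additive Gaussian noise with standard deviation sigma (same unit as x, here mV)."""
    return x + rng.normal(0.0, sigma, size=x.shape)


def mixup(x1, y1, x2, y2, alpha, rng):
    """Mixup: blend two examples and their labels with lam ~ Beta(alpha, alpha)."""
    lam = rng.beta(alpha, alpha)
    return lam * x1 + (1.0 - lam) * x2, lam * y1 + (1.0 - lam) * y2, lam


rng = np.random.default_rng(0)
x1 = rng.standard_normal((1, 12, 4000))  # random stand-ins for two cropped records
x2 = rng.standard_normal((1, 12, 4000))
noisy = add_gaussian_noise(x1, sigma=0.01, rng=rng)
x_mix, y_mix, lam = mixup(x1, 1.0, x2, 0.0, alpha=0.2, rng=rng)
print(noisy.shape, x_mix.shape)  # (1, 12, 4000) (1, 12, 4000)
```

Mixup produces soft labels such as 0.3 instead of 0 or 1; a BCE-with-logits loss should accept such targets in [0, 1], but this is worth verifying in the training setup before relying on it.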

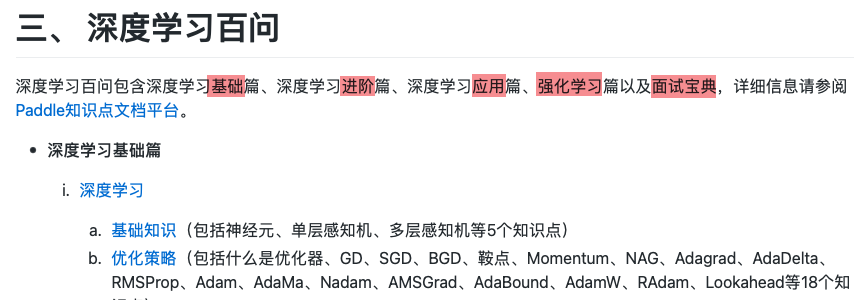

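8. More from PaddleEdu

1. awesome-DeepLearning, PaddleEdu's one-stop online deep learning encyclopedia, offers more, so stay tuned:

- Introductory deep learning course

- Deep learning Q&A

- Featured courses

- Industry practice

If you run into any problems while using PaddleEdu, feel free to open an issue on awesome-DeepLearning; for more deep learning material, see the PaddlePaddle deep learning platform.

Remember to give it a Star ⭐ to bookmark it!

2. PaddleEdu technical discussion group (QQ)

The QQ group already has 2000+ members learning together; scan the QR code to join.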
