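MRLA: Cross-Layer Retrospective Retrieving via Multi-Head Recurrent Layer Attention

A growing body of evidence shows that strengthening interactions between layers improves the representational power of deep neural networks, while self-attention excels at learning interdependencies by retrieving query-activated information. Building on this, the paper designs a cross-layer attention mechanism called MRLA.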

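Abstract

A growing body of evidence shows that strengthening interactions between layers improves the representational power of deep neural networks, while self-attention excels at learning interdependencies by retrieving query-activated information. Motivated by this, we design a cross-layer attention mechanism called multi-head recurrent layer attention (MRLA), which sends the query representation of the current layer to all previous layers in order to retrieve query-related information from receptive fields at different levels. To reduce the quadratic computation cost, a lightweight version of MRLA is also proposed. The proposed layer attention mechanism can enrich the representational power of many state-of-the-art vision networks, including CNNs and vision Transformers. Its effectiveness has been evaluated extensively on image classification, object detection and instance segmentation, where consistent improvements are observed. For example, MRLA improves top-1 accuracy on ResNet-50 by 1.6% while adding only 0.16M parameters and 0.07B FLOPs. Surprisingly, on dense prediction tasks it improves box AP and mask AP by 3-4%.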

1. MRLA

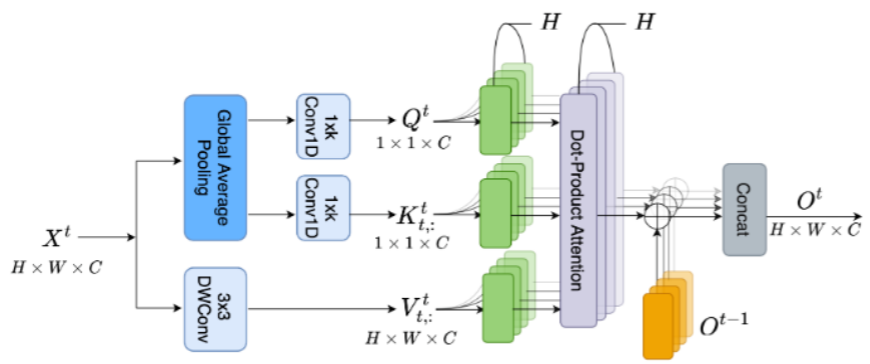

Let $X^t$ denote the output of layer $t$. The input to layer attention is then the matrix $[X^1, X^2, \dots, X^t]$, and layer attention can be written as:

$$
\begin{array}{c}
\boldsymbol{Q}^{t}=f_{Q}^{t}\left(\boldsymbol{X}^{t}\right) \in \mathbb{R}^{1 \times D_{k}} \\
\boldsymbol{K}^{t}=\operatorname{Concat}\left[f_{K}^{t}\left(\boldsymbol{X}^{1}\right), \ldots, f_{K}^{t}\left(\boldsymbol{X}^{t}\right)\right] \quad \text{and} \quad \boldsymbol{V}^{t}=\operatorname{Concat}\left[f_{V}^{t}\left(\boldsymbol{X}^{1}\right), \ldots, f_{V}^{t}\left(\boldsymbol{X}^{t}\right)\right] \\
\boldsymbol{O}^{t}=\boldsymbol{Q}^{t}\left(\boldsymbol{K}^{t}\right)^{\top} \boldsymbol{V}^{t}=\sum_{s=1}^{t} \boldsymbol{Q}^{t}\left(\boldsymbol{K}_{s,:}^{t}\right)^{\top} \boldsymbol{V}_{s,:}^{t}
\end{array}
$$
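The last equality in the layer attention formula says that the matrix product can be read as a sum of per-layer contributions. The following is a minimal sketch of that identity in plain Python; the sizes `t`, `d_k`, `d_v` and the random values are illustrative assumptions, not settings from the paper.

```python
import random

random.seed(0)
t, d_k, d_v = 4, 3, 5   # illustrative sizes, not taken from the paper

# Q: query of the current layer (length d_k); K, V: one row per layer 1..t.
Q = [random.gauss(0, 1) for _ in range(d_k)]
K = [[random.gauss(0, 1) for _ in range(d_k)] for _ in range(t)]
V = [[random.gauss(0, 1) for _ in range(d_v)] for _ in range(t)]

def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

# Matrix form: scores = Q K^T (one score per layer), then O = scores V.
scores = [dot(Q, K[s]) for s in range(t)]
O_matrix = [sum(scores[s] * V[s][j] for s in range(t)) for j in range(d_v)]

# Sum form: accumulate each layer's contribution Q (K_s)^T V_s one at a time.
O_sum = [0.0] * d_v
for s in range(t):
    score_s = dot(Q, K[s])
    O_sum = [o + score_s * v for o, v in zip(O_sum, V[s])]

# Both forms give the same output vector.
assert all(abs(a - b) < 1e-9 for a, b in zip(O_matrix, O_sum))
```

Reading the output as a sum over layers is what makes the recurrent rewrite possible: the contribution of the newest layer can be split off from the contributions of the earlier ones.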

The formula above shows that every call to layer attention has to recompute the keys and values of all previous layers, because $f_K^t$ and $f_V^t$ depend on the current layer $t$. The paper therefore uses a separate key and value generating function for each layer, so that keys and values computed earlier can be reused:

$$
\boldsymbol{K}^{t}=\operatorname{Concat}\left[f_{K}^{1}\left(\boldsymbol{X}^{1}\right), \ldots, f_{K}^{t}\left(\boldsymbol{X}^{t}\right)\right] \quad \text{and} \quad \boldsymbol{V}^{t}=\operatorname{Concat}\left[f_{V}^{1}\left(\boldsymbol{X}^{1}\right), \ldots, f_{V}^{t}\left(\boldsymbol{X}^{t}\right)\right]
$$

With this simplification, the first $t-1$ rows of $\boldsymbol{K}^{t}$ and $\boldsymbol{V}^{t}$ are exactly $\boldsymbol{K}^{t-1}$ and $\boldsymbol{V}^{t-1}$, and layer attention can be rewritten as:

$$
\begin{aligned}
\boldsymbol{O}^{t} & =\sum_{s=1}^{t-1} \boldsymbol{Q}^{t}\left(\boldsymbol{K}_{s,:}^{t}\right)^{\top} \boldsymbol{V}_{s,:}^{t}+\boldsymbol{Q}^{t}\left(\boldsymbol{K}_{t,:}^{t}\right)^{\top} \boldsymbol{V}_{t,:}^{t} \\
& =\sum_{s=1}^{t-1} \boldsymbol{Q}^{t}\left(\boldsymbol{K}_{s,:}^{t-1}\right)^{\top} \boldsymbol{V}_{s,:}^{t-1}+\boldsymbol{Q}^{t}\left(\boldsymbol{K}_{t,:}^{t}\right)^{\top} \boldsymbol{V}_{t,:}^{t} \\
& =\boldsymbol{Q}^{t}\left(\boldsymbol{K}^{t-1}\right)^{\top} \boldsymbol{V}^{t-1}+\boldsymbol{Q}^{t}\left(\boldsymbol{K}_{t,:}^{t}\right)^{\top} \boldsymbol{V}_{t,:}^{t}
\end{aligned}
$$

To reduce the computation further, the paper introduces an approximation. Let $\boldsymbol{Q}^{t}=\boldsymbol{\lambda}_{q}^{t} \odot \boldsymbol{Q}^{t-1}$, which gives:

$$
\begin{aligned}
\boldsymbol{Q}^{t}\left(\boldsymbol{K}^{t-1}\right)^{\top} \boldsymbol{V}^{t-1} & =\left(\boldsymbol{\lambda}_{q}^{t} \odot \boldsymbol{Q}^{t-1}\right)\left(\boldsymbol{K}^{t-1}\right)^{\top} \boldsymbol{V}^{t-1} \\
& \approx \boldsymbol{\lambda}_{o}^{t} \odot\left[\boldsymbol{Q}^{t-1}\left(\boldsymbol{K}^{t-1}\right)^{\top} \boldsymbol{V}^{t-1}\right]=\boldsymbol{\lambda}_{o}^{t} \odot \boldsymbol{O}^{t-1}
\end{aligned}
$$

$$
\boldsymbol{O}^{t}=\boldsymbol{\lambda}_{o}^{t} \odot \boldsymbol{O}^{t-1}+\boldsymbol{Q}^{t}\left(\boldsymbol{K}_{t,:}^{t}\right)^{\top} \boldsymbol{V}_{t,:}^{t}=\sum_{l=0}^{t-1} \boldsymbol{\beta}_{l} \odot\left[\boldsymbol{Q}^{t-l}\left(\boldsymbol{K}_{t-l,:}^{t-l}\right)^{\top} \boldsymbol{V}_{t-l,:}^{t-l}\right]
$$

Here $\boldsymbol{\beta}_{0}=\mathbf{1}$ and $\boldsymbol{\beta}_{l}=\boldsymbol{\lambda}_{o}^{t} \odot \cdots \odot \boldsymbol{\lambda}_{o}^{t-l+1}$ for $l \geq 1$.
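The recurrent form and the unrolled sum with the coefficients $\boldsymbol{\beta}_l$ describe the same quantity. The sketch below checks this numerically in plain Python. It assumes $\boldsymbol{O}^{0}=\mathbf{0}$, and the lists `A` and `lam` are hypothetical stand-ins for the per-layer attention terms and the learnable $\boldsymbol{\lambda}_o$ vectors; the values are random, not trained.

```python
import random

random.seed(0)
t, d = 5, 4   # number of layers and feature width (illustrative)

# A[i] stands in for the current-layer term Q (K_{i,:})^T V_{i,:} of layer i+1.
A = [[random.gauss(0, 1) for _ in range(d)] for _ in range(t)]
# lam[i] stands in for lambda_o of layer i+1 (lam[0] has no effect since O^0 = 0).
lam = [[random.uniform(0.5, 1.5) for _ in range(d)] for _ in range(t)]

# Recurrent form: O^i = lambda_o^i * O^{i-1} + A^i, starting from O^0 = 0.
O = [0.0] * d
for i in range(t):
    O = [l * o + a for l, o, a in zip(lam[i], O, A[i])]

# Unrolled form: O^t = sum_l beta_l * A^{t-l}, with beta_0 = 1 and
# beta_l = lambda^t * ... * lambda^{t-l+1} (all products are elementwise).
O_unrolled = [0.0] * d
beta = [1.0] * d
for l in range(t):
    layer = t - 1 - l   # zero-based index of layer t-l
    O_unrolled = [o + b * a for o, b, a in zip(O_unrolled, beta, A[layer])]
    beta = [b * x for b, x in zip(beta, lam[layer])]   # extend the product by lambda^{t-l}

assert all(abs(x - y) < 1e-9 for x, y in zip(O, O_unrolled))
```

The recurrent form needs only the previous output and the current layer's term, so its cost per layer does not grow with depth, whereas the unrolled sum touches every earlier layer.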

2. Code reproduction

2.1 Download and import the required packages

%matplotlib inline
import paddle
import numpy as np
import matplotlib.pyplot as plt
from paddle.vision.datasets import Cifar10
from paddle.vision.transforms import Transpose
from paddle.io import Dataset, DataLoader
from paddle import nn
import paddle.nn.functional as F
import paddle.vision.transforms as transforms
import os
from matplotlib.pyplot import figure
from paddle import ParamAttr
from paddle.nn.layer.norm import _BatchNormBase
import math

2.2 Create the datasets

train_tfm = transforms.Compose([
    transforms.RandomResizedCrop(32),
    transforms.RandomHorizontalFlip(0.5),
    transforms.ToTensor(),
    transforms.Normalize(mean=(0.5, 0.5, 0.5), std=(0.5, 0.5, 0.5)),
])

test_tfm = transforms.Compose([
    transforms.Resize((32, 32)),
    transforms.ToTensor(),
    transforms.Normalize(mean=(0.5, 0.5, 0.5), std=(0.5, 0.5, 0.5)),
])

paddle.vision.set_image_backend('cv2')
# Use the CIFAR-10 dataset
train_dataset = Cifar10(data_file='data/data152754/cifar-10-python.tar.gz', mode='train', transform=train_tfm)
val_dataset = Cifar10(data_file='data/data152754/cifar-10-python.tar.gz', mode='test', transform=test_tfm)
print("train_dataset: %d" % len(train_dataset))
print("val_dataset: %d" % len(val_dataset))

train_dataset: 50000

val_dataset: 10000

batch_size=256

train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True, drop_last=True, num_workers=4)

val_loader = DataLoader(val_dataset, batch_size=batch_size, shuffle=False, drop_last=False, num_workers=4)

2.3 Label smoothing

class LabelSmoothingCrossEntropy(nn.Layer):
    def __init__(self, smoothing=0.1):
        super().__init__()
        self.smoothing = smoothing

    def forward(self, pred, target):
        confidence = 1. - self.smoothing
        log_probs = F.log_softmax(pred, axis=-1)
        # Pair each row index with its target class to pick out log p(target)
        idx = paddle.stack([paddle.arange(log_probs.shape[0]), target], axis=1)
        nll_loss = paddle.gather_nd(-log_probs, index=idx)
        # Uniform part of the smoothed target: mean negative log-probability over classes
        smooth_loss = paddle.mean(-log_probs, axis=-1)
        loss = confidence * nll_loss + self.smoothing * smooth_loss

        return loss.mean()
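The loss above mixes the usual negative log-likelihood with the mean negative log-probability over all classes. This is the same as cross-entropy against a softened target distribution that puts `smoothing / num_classes` on every class and the remaining `1 - smoothing` on the true class. The sketch below checks that equivalence for a single sample in plain Python; the helper names and the logits are made up for illustration and are not part of the original project.

```python
import math

def log_softmax(logits):
    """Numerically stable log-softmax for one sample."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]

def smoothed_ce(logits, target, smoothing=0.1):
    """Same per-sample arithmetic as LabelSmoothingCrossEntropy.forward."""
    log_probs = log_softmax(logits)
    nll = -log_probs[target]
    smooth = -sum(log_probs) / len(log_probs)
    return (1.0 - smoothing) * nll + smoothing * smooth

def soft_target_ce(logits, target, smoothing=0.1):
    """Cross-entropy against the explicitly smoothed target distribution."""
    n = len(logits)
    log_probs = log_softmax(logits)
    q = [smoothing / n + (1.0 - smoothing) * (i == target) for i in range(n)]
    return -sum(qi * lp for qi, lp in zip(q, log_probs))

logits = [2.0, -1.0, 0.5, 0.0]   # made-up logits for one 4-class sample
a = smoothed_ce(logits, target=0)
b = soft_target_ce(logits, target=0)
assert abs(a - b) < 1e-9
```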

2.4 ResNet-MRLA

2.4.1 MRLA

class MLA_Layer(nn.Layer):
    def __init__(self, input_dim, groups=None, dim_pergroup=None, k_size=None, qk_scale=None):
        super().__init__()
        self.input_dim = input_dim
        if (groups is None) and (dim_pergroup is None):
            raise ValueError("arguments groups and dim_pergroup cannot be None at the same time !")
        elif dim_pergroup is not None:
            # dim_pergroup takes precedence over groups when both are given
            groups = int(input_dim / dim_pergroup)
        self.groups = groups

        if k_size is None:
            # Choose an odd 1D kernel size that grows with log2 of the channel count
            t = int(abs((math.log(input_dim, 2) + 1) / 2.))
            k_size = t if t % 2 else t+1
        self.k_size = k_size

        self.avgpool = nn.AdaptiveAvgPool2D(1)
        self.convk = nn.Conv1D(1, 1, k_size, padding=k_size // 2, bias_attr=False)
        self.convq = nn.Conv1D(1, 1, k_size, padding=k_size // 2, bias_attr=False)
        self.convv = nn.Conv2D(input_dim, input_dim, 3, padding=1, groups=input_dim, bias_attr=False)
        self.qk_scale = 1 / math.sqrt(input_dim / groups) if qk_scale is None else qk_scale
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        b, c, h, w = x.shape
        y = self.avgpool(x) # b, c, 1, 1
        y = y.squeeze(-1).transpose([0, 2, 1]) # b, 1, c
        q = self.convq(y)
        k = self.convk(y)
        v = self.convv(x)

        q = q.reshape((b, self.groups, 1, c//self.groups))
        k = k.reshape((b, self.groups, 1, c//self.groups))
        v = v.reshape((b, self.groups, c // self.groups, h, w))

        # One scalar attention score per group (head)
        attn = (q @ k.transpose([0, 1, 3, 2])) * self.qk_scale
        attn = self.sigmoid(attn).reshape((b, self.groups, 1, 1, 1))

        out = v * attn
        out = out.reshape((b, c, h, w))

        return out

class mrla_module(nn.Layer):
    def __init__(self, input_dim, dim_pergroup = 32):
        super().__init__()
        self.dim_pergroup = dim_pergroup
        self.mla = MLA_Layer(input_dim=input_dim, dim_pergroup=self.dim_pergroup)
        self.lambda_t = self.create_parameter((1, input_dim, 1, 1))

    def forward(self, xt, ot_1):
        atten_t = self.mla(xt)
        out = atten_t + self.lambda_t.expand_as(ot_1) * ot_1 # o_t = atten(x_t) + lambda_t * o_{t-1}
        return out

model = mrla_module(64)
paddle.summary(model, [(1, 64, 32, 32), (1, 64, 32, 32)])

-------------------------------------------------------------------------------
Layer (type)           Input Shape           Output Shape        Param #
===============================================================================
AdaptiveAvgPool2D-1    [[1, 64, 32, 32]]     [1, 64, 1, 1]       0
Conv1D-2               [[1, 1, 64]]          [1, 1, 64]          3
Conv1D-1               [[1, 1, 64]]          [1, 1, 64]          3
Conv2D-1               [[1, 64, 32, 32]]     [1, 64, 32, 32]     576
Sigmoid-1              [[1, 2, 1, 1]]        [1, 2, 1, 1]        0
MLA_Layer-1            [[1, 64, 32, 32]]     [1, 64, 32, 32]     0
===============================================================================
Total params: 582
Trainable params: 582
Non-trainable params: 0
-------------------------------------------------------------------------------
Input size (MB): 0.50
Forward/backward pass size (MB): 1.00
Params size (MB): 0.00
Estimated Total Size (MB): 1.50
-------------------------------------------------------------------------------

{'total_params': 582, 'trainable_params': 582}
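The two `Conv1D` rows in the summary have 3 parameters each because `MLA_Layer` derives its 1D kernel size from the channel count when `k_size` is not given. The sketch below isolates that rule as a standalone function; `default_k_size` is a hypothetical helper name, and the channel counts are the four stage widths used by ResNet18.

```python
import math

def default_k_size(input_dim):
    """Kernel size rule used in MLA_Layer when k_size is None."""
    t = int(abs((math.log(input_dim, 2) + 1) / 2.0))
    return t if t % 2 else t + 1   # bump even values to the next odd size

# Kernel sizes for the four ResNet18 stage widths
for channels in (64, 128, 256, 512):
    print(channels, default_k_size(channels))
```

For 64 channels the rule gives `int(3.5) = 3`, which matches the summary. Note that for 128 channels `(log2(128) + 1) / 2` is exactly 4 mathematically, so the truncation in `int(...)` depends on how `math.log(128, 2)` rounds in floating point; a variant based on `math.log2` would avoid that sensitivity, though that is a suggestion rather than what the original code does.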

2.4.2 ResNet-MRLA

class BasicBlock(nn.Layer):
    expansion = 1

    def __init__(
        self,
        inplanes,
        planes,
        stride=1,
        downsample=None,
        groups=1,
        base_width=64,
        dilation=1,
        norm_layer=None,
    ):
        super().__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2D

        if dilation > 1:
            raise NotImplementedError(
                "Dilation > 1 not supported in BasicBlock"
            )

        self.conv1 = nn.Conv2D(
            inplanes, planes, 3, padding=1, stride=stride, bias_attr=False
        )
        self.bn1 = norm_layer(planes)
        self.relu = nn.ReLU()
        self.conv2 = nn.Conv2D(planes, planes, 3, padding=1, bias_attr=False)
        self.bn2 = norm_layer(planes)
        self.mrla = mrla_module(planes)
        self.mrla_bn = norm_layer(planes)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        identity = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)

        if self.downsample is not None:
            identity = self.downsample(x)

        out += identity
        out = self.relu(out)

        out = out + self.mrla_bn(self.mrla(out, identity))

        return out

class BottleneckBlock(nn.Layer):
    expansion = 4

    def __init__(
        self,
        inplanes,
        planes,
        stride=1,
        downsample=None,
        groups=1,
        base_width=64,
        dilation=1,
        norm_layer=None,
    ):
        super().__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2D
        width = int(planes * (base_width / 64.0)) * groups

        self.conv1 = nn.Conv2D(inplanes, width, 1, bias_attr=False)
        self.bn1 = norm_layer(width)

        self.conv2 = nn.Conv2D(
            width,
            width,
            3,
            padding=dilation,
            stride=stride,
            groups=groups,
            dilation=dilation,
            bias_attr=False,
        )
        self.bn2 = norm_layer(width)

        self.conv3 = nn.Conv2D(
            width, planes * self.expansion, 1, bias_attr=False
        )
        self.bn3 = norm_layer(planes * self.expansion)
        self.relu = nn.ReLU()
        self.mrla = mrla_module(planes * self.expansion)
        self.mrla_bn = norm_layer(planes * self.expansion)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        identity = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        out = self.conv3(out)
        out = self.bn3(out)

        if self.downsample is not None:
            identity = self.downsample(x)

        out += identity
        out = self.relu(out)

        out = out + self.mrla_bn(self.mrla(out, identity))

        return out

class ResNet(nn.Layer):
    """ResNet model from
    `"Deep Residual Learning for Image Recognition" <https://arxiv.org/pdf/1512.03385.pdf>`_.
    Args:
        Block (BasicBlock|BottleneckBlock): Block module of model.
        depth (int, optional): Layers of ResNet, Default: 50.
        width (int, optional): Base width per convolution group for each convolution block, Default: 64.
        num_classes (int, optional): Output num_features of last fc layer. If num_classes <= 0, last fc layer
                            will not be defined. Default: 1000.
        with_pool (bool, optional): Use pool before the last fc layer or not. Default: True.
        groups (int, optional): Number of groups for each convolution block, Default: 1.
    Returns:
        :ref:`api_paddle_nn_Layer`. An instance of ResNet model.
    Examples:
        .. code-block:: python
            import paddle
            from paddle.vision.models import ResNet
            from paddle.vision.models.resnet import BottleneckBlock, BasicBlock
            # build ResNet with 18 layers
            resnet18 = ResNet(BasicBlock, 18)
            # build ResNet with 50 layers
            resnet50 = ResNet(BottleneckBlock, 50)
            # build Wide ResNet model
            wide_resnet50_2 = ResNet(BottleneckBlock, 50, width=64*2)
            # build ResNeXt model
            resnext50_32x4d = ResNet(BottleneckBlock, 50, width=4, groups=32)
            x = paddle.rand([1, 3, 224, 224])
            out = resnet18(x)
            print(out.shape)
            # [1, 1000]
    """

    def __init__(
        self,
        block,
        depth=50,
        width=64,
        num_classes=1000,
        with_pool=True,
        groups=1,
    ):
        super().__init__()
        layer_cfg = {
            18: [2, 2, 2, 2],
            34: [3, 4, 6, 3],
            50: [3, 4, 6, 3],
            101: [3, 4, 23, 3],
            152: [3, 8, 36, 3],
        }
        layers = layer_cfg[depth]
        self.groups = groups
        self.base_width = width
        self.num_classes = num_classes
        self.with_pool = with_pool
        self._norm_layer = nn.BatchNorm2D

        self.inplanes = 64
        self.dilation = 1

        # 3x3 stem with stride 1 and no max pooling, suited to 32x32 CIFAR-10 inputs
        self.conv1 = nn.Conv2D(
            3,
            self.inplanes,
            kernel_size=3,
            stride=1,
            padding=1,
            bias_attr=False,
        )
        self.bn1 = self._norm_layer(self.inplanes)
        self.relu = nn.ReLU()
        self.layer1 = self._make_layer(block, 64, layers[0])
        self.layer2 = self._make_layer(block, 128, layers[1], stride=2)
        self.layer3 = self._make_layer(block, 256, layers[2], stride=2)
        self.layer4 = self._make_layer(block, 512, layers[3], stride=2)
        if with_pool:
            self.avgpool = nn.AdaptiveAvgPool2D((1, 1))

        if num_classes > 0:
            self.fc = nn.Linear(512 * block.expansion, num_classes)

    def _make_layer(self, block, planes, blocks, stride=1, dilate=False):
        norm_layer = self._norm_layer
        downsample = None
        previous_dilation = self.dilation
        if dilate:
            self.dilation *= stride
            stride = 1
        if stride != 1 or self.inplanes != planes * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2D(
                    self.inplanes,
                    planes * block.expansion,
                    1,
                    stride=stride,
                    bias_attr=False,
                ),
                norm_layer(planes * block.expansion),
            )

        layers = []
        layers.append(
            block(
                self.inplanes,
                planes,
                stride,
                downsample,
                self.groups,
                self.base_width,
                previous_dilation,
                norm_layer,
            )
        )
        self.inplanes = planes * block.expansion
        for _ in range(1, blocks):
            layers.append(
                block(
                    self.inplanes,
                    planes,
                    groups=self.groups,
                    base_width=self.base_width,
                    norm_layer=norm_layer,
                )
            )

        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)

        if self.with_pool:
            x = self.avgpool(x)

        if self.num_classes > 0:
            x = paddle.flatten(x, 1)
            x = self.fc(x)

        return x

model = ResNet(BasicBlock, depth=18, num_classes=10)
paddle.summary(model, (1, 3, 32, 32))

2.5 Training

learning_rate = 0.1
n_epochs = 100
paddle.seed(42)
np.random.seed(42)

work_path = 'work/model'

model = ResNet(BasicBlock, depth=18, num_classes=10)

criterion = LabelSmoothingCrossEntropy()

scheduler = paddle.optimizer.lr.MultiStepDecay(learning_rate=learning_rate, milestones=[30, 60, 80], verbose=False)
optimizer = paddle.optimizer.Momentum(parameters=model.parameters(), learning_rate=scheduler, weight_decay=1e-5)

gate = 0.0
threshold = 0.0
best_acc = 0.0
val_acc = 0.0
loss_record = {'train': {'loss': [], 'iter': []}, 'val': {'loss': [], 'iter': []}}   # for recording loss
acc_record = {'train': {'acc': [], 'iter': []}, 'val': {'acc': [], 'iter': []}}      # for recording accuracy

loss_iter = 0
acc_iter = 0

for epoch in range(n_epochs):
    # ---------- Training ----------
    model.train()
    train_num = 0.0
    train_loss = 0.0

    val_num = 0.0
    val_loss = 0.0
    accuracy_manager = paddle.metric.Accuracy()
    val_accuracy_manager = paddle.metric.Accuracy()
    print("#===epoch: {}, lr={:.10f}===#".format(epoch, optimizer.get_lr()))
    for batch_id, data in enumerate(train_loader):
        x_data, y_data = data
        labels = paddle.unsqueeze(y_data, axis=1)

        logits = model(x_data)

        loss = criterion(logits, y_data)

        acc = paddle.metric.accuracy(logits, labels)
        accuracy_manager.update(acc)
        # Record the training loss every 10 batches
        if batch_id % 10 == 0:
            loss_record['train']['loss'].append(loss.numpy())
            loss_record['train']['iter'].append(loss_iter)
        loss_iter += 1

        loss.backward()

        optimizer.step()
        optimizer.clear_grad()

        train_loss += loss
        train_num += len(y_data)
    # The learning-rate schedule advances once per epoch
    scheduler.step()

    total_train_loss = (train_loss / train_num) * batch_size
    train_acc = accuracy_manager.accumulate()
    acc_record['train']['acc'].append(train_acc)
    acc_record['train']['iter'].append(acc_iter)
    acc_iter += 1
    # Print the information.
    print("#===epoch: {}, train loss is: {}, train acc is: {:2.2f}%===#".format(epoch, total_train_loss.numpy(), train_acc*100))

    # ---------- Validation ----------
    model.eval()

    for batch_id, data in enumerate(val_loader):

        x_data, y_data = data
        labels = paddle.unsqueeze(y_data, axis=1)
        with paddle.no_grad():
            logits = model(x_data)

        loss = criterion(logits, y_data)

        acc = paddle.metric.accuracy(logits, labels)
        val_accuracy_manager.update(acc)

        val_loss += loss
        val_num += len(y_data)

    total_val_loss = (val_loss / val_num) * batch_size
    loss_record['val']['loss'].append(total_val_loss.numpy())
    loss_record['val']['iter'].append(loss_iter)
    val_acc = val_accuracy_manager.accumulate()
    acc_record['val']['acc'].append(val_acc)
    acc_record['val']['iter'].append(acc_iter)

    print("#===epoch: {}, val loss is: {}, val acc is: {:2.2f}%===#".format(epoch, total_val_loss.numpy(), val_acc*100))

    # ===================save====================
    # Keep the checkpoint with the best validation accuracy so far
    if val_acc > best_acc:
        best_acc = val_acc
        paddle.save(model.state_dict(), os.path.join(work_path, 'best_model.pdparams'))
        paddle.save(optimizer.state_dict(), os.path.join(work_path, 'best_optimizer.pdopt'))

print(best_acc)
paddle.save(model.state_dict(), os.path.join(work_path, 'final_model.pdparams'))
paddle.save(optimizer.state_dict(), os.path.join(work_path, 'final_optimizer.pdopt'))
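The training script steps `MultiStepDecay` once per epoch with milestones `[30, 60, 80]`. As a rough illustration of the learning rates this produces, the sketch below recomputes the schedule in plain Python. It assumes the decay factor `gamma=0.1`, which is Paddle's documented default and is not set explicitly in the script; `multistep_lr` is a hypothetical helper, not a Paddle function.

```python
def multistep_lr(epoch, base_lr=0.1, milestones=(30, 60, 80), gamma=0.1):
    """Learning rate at a given epoch: base_lr times gamma for every milestone reached."""
    passed = sum(1 for m in milestones if epoch >= m)
    return base_lr * gamma ** passed

# Learning rate at the start of training and right after each milestone
for epoch in (0, 29, 30, 60, 80, 99):
    print(epoch, multistep_lr(epoch))
```

Under this assumption the 100 epochs are split into four phases with learning rates of roughly 0.1, 0.01, 0.001 and 0.0001.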

2.6 Experimental results

def plot_learning_curve(record, title='loss', ylabel='CE Loss'):
    ''' Plot learning curve of your CNN '''
    maxtrain = max(map(float, record['train'][title]))
    maxval = max(map(float, record['val'][title]))
    ymax = max(maxtrain, maxval) * 1.1
    mintrain = min(map(float, record['train'][title]))
    minval = min(map(float, record['val'][title]))
    ymin = min(mintrain, minval) * 0.9

    total_steps = len(record['train'][title])
    x_1 = list(map(int, record['train']['iter']))
    x_2 = list(map(int, record['val']['iter']))
    figure(figsize=(10, 6))
    plt.plot(x_1, record['train'][title], c='tab:red', label='train')
    plt.plot(x_2, record['val'][title], c='tab:cyan', label='val')
    plt.ylim(ymin, ymax)
    plt.xlabel('Training steps')
    plt.ylabel(ylabel)
    plt.title('Learning curve of {}'.format(title))
    plt.legend()
    plt.show()

plot_learning_curve(loss_record, title='loss', ylabel='CE Loss')

![Learning curve of loss](https://i-blog.csdnimg.cn/blog_migrate/90a0d6a3250c0f009bd034e70055c8f3.png)

plot_learning_curve(acc_record, title='acc', ylabel='Accuracy')

import time
work_path = 'work/model'
model = ResNet(BasicBlock, depth=18, num_classes=10)
model_state_dict = paddle.load(os.path.join(work_path, 'best_model.pdparams'))
model.set_state_dict(model_state_dict)
model.eval()
# Time one full pass over the validation set to estimate images per second
aa = time.time()
for batch_id, data in enumerate(val_loader):

    x_data, y_data = data
    labels = paddle.unsqueeze(y_data, axis=1)
    with paddle.no_grad():
        logits = model(x_data)
bb = time.time()
print("Throughput:{}".format(int(len(val_dataset)//(bb - aa))))

Throughput:3263

3. ResNet

3.1 ResNet

model = paddle.vision.models.resnet18(num_classes=10)

model.conv1 = nn.Conv2D(3, 64, 3, padding=1, bias_attr=False)

model.maxpool = nn.Identity()

paddle.summary(model, (1, 3, 128, 128))

3.2 Training

learning_rate = 0.1
n_epochs = 100
paddle.seed(42)
np.random.seed(42)

work_path = 'work/model1'

model = paddle.vision.models.resnet18(num_classes=10)
model.conv1 = nn.Conv2D(3, 64, 3, padding=1, bias_attr=False)
model.maxpool = nn.Identity()

criterion = LabelSmoothingCrossEntropy()

scheduler = paddle.optimizer.lr.MultiStepDecay(learning_rate=learning_rate, milestones=[30, 60, 80], verbose=False)
optimizer = paddle.optimizer.Momentum(parameters=model.parameters(), learning_rate=scheduler, weight_decay=1e-5)

gate = 0.0
threshold = 0.0
best_acc = 0.0
val_acc = 0.0
loss_record1 = {'train': {'loss': [], 'iter': []}, 'val': {'loss': [], 'iter': []}}   # for recording loss
acc_record1 = {'train': {'acc': [], 'iter': []}, 'val': {'acc': [], 'iter': []}}      # for recording accuracy

loss_iter = 0
acc_iter = 0

for epoch in range(n_epochs):
    # ---------- Training ----------
    model.train()
    train_num = 0.0
    train_loss = 0.0

    val_num = 0.0
    val_loss = 0.0
    accuracy_manager = paddle.metric.Accuracy()
    val_accuracy_manager = paddle.metric.Accuracy()
    print("#===epoch: {}, lr={:.10f}===#".format(epoch, optimizer.get_lr()))
    for batch_id, data in enumerate(train_loader):
        x_data, y_data = data
        labels = paddle.unsqueeze(y_data, axis=1)

        logits = model(x_data)

        loss = criterion(logits, y_data)

        acc = paddle.metric.accuracy(logits, labels)
        accuracy_manager.update(acc)
        # Record the training loss every 10 batches
        if batch_id % 10 == 0:
            loss_record1['train']['loss'].append(loss.numpy())
            loss_record1['train']['iter'].append(loss_iter)
        loss_iter += 1

        loss.backward()

        optimizer.step()
        optimizer.clear_grad()

        train_loss += loss
        train_num += len(y_data)
    # The learning-rate schedule advances once per epoch
    scheduler.step()

    total_train_loss = (train_loss / train_num) * batch_size
    train_acc = accuracy_manager.accumulate()
    acc_record1['train']['acc'].append(train_acc)
    acc_record1['train']['iter'].append(acc_iter)
    acc_iter += 1
    # Print the information.
    print("#===epoch: {}, train loss is: {}, train acc is: {:2.2f}%===#".format(epoch, total_train_loss.numpy(), train_acc*100))

    # ---------- Validation ----------
    model.eval()

    for batch_id, data in enumerate(val_loader):

        x_data, y_data = data
        labels = paddle.unsqueeze(y_data, axis=1)
        with paddle.no_grad():
            logits = model(x_data)

        loss = criterion(logits, y_data)

        acc = paddle.metric.accuracy(logits, labels)
        val_accuracy_manager.update(acc)

        val_loss += loss
        val_num += len(y_data)

    total_val_loss = (val_loss / val_num) * batch_size
    loss_record1['val']['loss'].append(total_val_loss.numpy())
    loss_record1['val']['iter'].append(loss_iter)
    val_acc = val_accuracy_manager.accumulate()
    acc_record1['val']['acc'].append(val_acc)
    acc_record1['val']['iter'].append(acc_iter)

    print("#===epoch: {}, val loss is: {}, val acc is: {:2.2f}%===#".format(epoch, total_val_loss.numpy(), val_acc*100))

    # ===================save====================
    # Keep the checkpoint with the best validation accuracy so far
    if val_acc > best_acc:
        best_acc = val_acc
        paddle.save(model.state_dict(), os.path.join(work_path, 'best_model.pdparams'))
        paddle.save(optimizer.state_dict(), os.path.join(work_path, 'best_optimizer.pdopt'))

print(best_acc)
paddle.save(model.state_dict(), os.path.join(work_path, 'final_model.pdparams'))
paddle.save(optimizer.state_dict(), os.path.join(work_path, 'final_optimizer.pdopt'))

3.3 Experimental results

plot_learning_curve(loss_record1, title='loss', ylabel='CE Loss')

plot_learning_curve(acc_record1, title='acc', ylabel='Accuracy')

import time
work_path = 'work/model1'
model = paddle.vision.models.resnet18(num_classes=10)
model.conv1 = nn.Conv2D(3, 64, 3, padding=1, bias_attr=False)
model.maxpool = nn.Identity()
model_state_dict = paddle.load(os.path.join(work_path, 'best_model.pdparams'))
model.set_state_dict(model_state_dict)
model.eval()
# Time one full pass over the validation set to estimate images per second
aa = time.time()
for batch_id, data in enumerate(val_loader):

    x_data, y_data = data
    labels = paddle.unsqueeze(y_data, axis=1)
    with paddle.no_grad():
        logits = model(x_data)
bb = time.time()
print("Throughput:{}".format(int(len(val_dataset)//(bb - aa))))

Throughput:4270
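The two throughput figures can be put side by side to see what the MRLA modules cost at inference time. The sketch below only redoes the arithmetic on the two numbers reported in this article (3263 and 4270 images per second); both come from single timed runs, so the ratio should be read as a rough indication rather than a benchmark.

```python
mrla_throughput = 3263       # images/s reported above for ResNet18 with MRLA
baseline_throughput = 4270   # images/s reported above for plain ResNet18

ratio = mrla_throughput / baseline_throughput
slowdown_pct = (1 - ratio) * 100
print(f"relative throughput: {ratio:.3f}, slowdown: {slowdown_pct:.1f}%")
# prints: relative throughput: 0.764, slowdown: 23.6%
```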

4. Comparison of results

| Model | Train Acc | Val Acc | Parameters |
|---|---|---|---|
| ResNet18 w/ MRLA | 0.8735 | 0.8935 | 11210514 |
| ResNet18 w/o MRLA | 0.8604 | 0.8852 | 11183562 |

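Summary

Starting from cross-layer attention, the paper derives a new layer attention mechanism through a series of equivalent reformulations and approximations. On ResNet18 trained on CIFAR-10, it adds only about 0.03M parameters and raises validation accuracy by about 0.8 percentage points (from 0.8852 to 0.8935).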
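The parameter and accuracy deltas quoted in the summary can be recomputed from the comparison table. The sketch below also tries to account for the extra parameters block by block. That accounting rests on assumptions not stated in the article: that the reported totals include the running mean and variance of each `mrla_bn`, and that the Conv1D kernel sizes are 3, 5, 5 and 5 for the 64, 128, 256 and 512 channel stages. `mrla_block_params` is a hypothetical helper written for this check.

```python
params_with_mrla = 11210514      # from the comparison table
params_without_mrla = 11183562   # from the comparison table
extra = params_with_mrla - params_without_mrla

def mrla_block_params(channels, k_size):
    """Extra parameters one residual block gains from mrla + mrla_bn (assumed accounting)."""
    conv_qk = 2 * k_size     # two bias-free Conv1D(1, 1, k_size) layers
    conv_v = 9 * channels    # depthwise 3x3 Conv2D without bias
    lam = channels           # lambda_t of shape (1, C, 1, 1)
    bn = 4 * channels        # mrla_bn: weight, bias, running mean, running variance
    return conv_qk + conv_v + lam + bn

stages = {64: 3, 128: 5, 256: 5, 512: 5}   # channels -> assumed Conv1D kernel size
blocks_per_stage = 2                        # ResNet18 uses [2, 2, 2, 2] BasicBlocks
estimate = sum(blocks_per_stage * mrla_block_params(c, k) for c, k in stages.items())

acc_gain_pp = (0.8935 - 0.8852) * 100       # validation accuracy gain in percentage points

print(extra, estimate, round(acc_gain_pp, 2))
```

Under these assumptions the block-level estimate reproduces the table difference of 26952 parameters, which rounds to the 0.03M quoted above, and the accuracy gain is about 0.83 percentage points.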

References

Paper: Cross-Layer Retrospective Retrieving via Layer Attention (ICLR 2023)

Code: joyfang1106/MRLA

This article is a repost.

Original project link
